How to set up haproxy as a fault-tolerant / highly available load balancer for multiple caching web proxies on RHEL/CentOS/SL

February 12, 2012

As I didn’t find much documentation on setting up a load balancer for multiple caching web proxies, especially in a highly available way on common enterprise Linux distributions, I sat down and wrote this howto.

If you’re working at a large organization, one web proxy will not be able to handle the whole load, and you’ll also want some redundancy in case one proxy fails. A common setup in this case is to use the PAC file to tell the clients to use different proxies, for example one for .com addresses and one for all others, or a random one for each page request. Others use DNS round robin to balance the load between the proxies. In both cases you can take a proxy node out of rotation for maintenance or if it goes down, but that is neither done within seconds nor automatic. This howto shows how to set up haproxy with corosync and pacemaker on RHEL6, CentOS6 or SL6 as a TCP load balancer for multiple HTTP proxies, which does exactly that. It is highly available by itself, and it also recognizes when a proxy no longer accepts connections and removes it automatically from the load balancing until it is back in operation.

The Setup

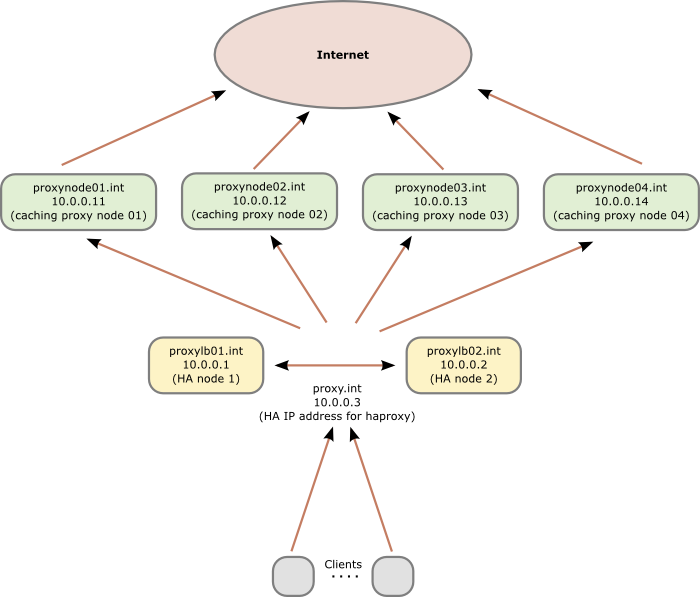

As many organizations will have appliances (which do much more than just caching the web) as their web proxies, I will show a setup with two additional servers (can be virtual or physical) which are used as load balancer. If you in your organization have normal Linux server as your web proxies you can of course use two or more also as load balancer nodes.

Following diagram shows the principle setup and the IP addresses and hostnames used in this howto:

Preconditions

As the proxies, and therefore the load balancers, are normally in the external DMZ, we care about security and therefore check that SELinux is activated. The whole setup runs with SELinux enabled without changing anything. For this we take a look at /etc/sysconfig/selinux and verify that SELINUX is set to enforcing. Change it if not and reboot. You should also install some packages with

yum install setroubleshoot setools-console

and make sure all is running with

[root@proxylb01/02 ~]# sestatus

SELinux status: enabled

SELinuxfs mount: /selinux

Current mode: enforcing

Mode from config file: enforcing

Policy version: 24

Policy from config file: targeted

and

[root@proxylb01/02 ~]# /etc/init.d/auditd status

auditd (pid 1047) is running...

on both nodes. After this we make sure that our own host names are in the hosts files, both for security reasons and in case the DNS servers go down. The /etc/hosts file on both nodes should contain the following:

10.0.0.1 proxylb01 proxylb01.int

10.0.0.2 proxylb02 proxylb02.int

10.0.0.3 proxy proxy.int
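
A quick way to confirm these entries on each node is sketched below. The helper name has_host_entry is made up for this illustration; it only reads the given file and ignores anything after a # on a line.

```shell
#!/bin/sh
# has_host_entry FILE IP NAME
# Hypothetical helper: succeeds if FILE contains a line that maps IP to NAME.
has_host_entry() {
    awk -v ip="$2" -v name="$3" '
        { sub(/#.*/, "") }                       # drop comments
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit found ? 0 : 1 }
    ' "$1"
}

# Check the three entries used in this howto against the local hosts file.
for entry in "10.0.0.1 proxylb01" "10.0.0.2 proxylb02" "10.0.0.3 proxy"; do
    set -- $entry
    if has_host_entry /etc/hosts "$1" "$2"; then
        echo "ok: $2 ($1)"
    else
        echo "MISSING: $2 ($1)"
    fi
done
```

Run it on both load balancer nodes; any MISSING line means the corresponding entry still has to be added.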

Software Installation and corosync setup

We need to add some additional repositories to get the required software. The haproxy package is in the EPEL repositories. corosync and pacemaker are shipped as part of the distribution in CentOS 6 and Scientific Linux 6, but on RHEL6 you need the High Availability Add-On to get the packages.

Install all the software we need with

[root@proxylb01/02 ~]# yum install pacemaker haproxy

[root@proxylb01/02 ~]# chkconfig corosync on

[root@proxylb01/02 ~]# chkconfig pacemaker on

We use the example corosync config as a starting point:

[root@proxylb01/02 ~]# cp /etc/corosync/corosync.conf.example /etc/corosync/corosync.conf

And we add the following lines after the version definition line:

# How long before declaring a token lost (ms)

token: 5000

# How many token retransmits before forming a new configuration

token_retransmits_before_loss_const: 20

# How long to wait for join messages in the membership protocol (ms)

join: 1000

# How long to wait for consensus to be achieved before starting a new round of membership configuration (ms)

consensus: 7500

# Turn off the virtual synchrony filter

vsftype: none

# Number of messages that may be sent by one processor on receipt of the token

max_messages: 20

These values make failover slower than the defaults, but less trigger-happy. This is required in my case because the machines run in VMware, where we use the snapshot feature to make backups and also move the VMware instances around. In both cases we’ve seen timeouts of up to 4 seconds under high load, normally 1-2 seconds.
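
As a sanity check on these numbers: as far as I remember from the corosync.conf man page, consensus has to be at least 1.2 times token, so treat that factor as an assumption and verify it against the man page of your version. The sketch below, with a made-up helper name consensus_ok, checks the relation with integer arithmetic only.

```shell
#!/bin/sh
# consensus_ok TOKEN_MS CONSENSUS_MS
# Hypothetical helper: succeeds if consensus >= 1.2 * token.
# The 1.2 factor is an assumption taken from the corosync.conf man page.
consensus_ok() {
    token_ms=$1
    consensus_ms=$2
    # Multiply both sides by 10 to stay in integer arithmetic.
    [ $((consensus_ms * 10)) -ge $((token_ms * 12)) ]
}

# Values used in this howto: token 5000 ms, consensus 7500 ms.
if consensus_ok 5000 7500; then
    echo "consensus is large enough for this token value"
else
    echo "consensus is too small for this token value"
fi
```

With a token of 5000 ms the cluster also tolerates the 4 second stalls mentioned above before a node is declared lost.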

A few lines further down we define the interface:

interface {

member {

memberaddr: 10.0.0.1

}

member {

memberaddr: 10.0.0.2

}

ringnumber: 0

bindnetaddr: 10.0.0.0

mcastport: 5405

ttl: 1

}

transport: udpu

We use the unicast feature introduced in RHEL 6.2; if you have an older version you need to use the multicast method. Of course you can also use multicast with 6.2 and higher, I just didn’t see the point of it for 2 nodes. The configuration file /etc/corosync/corosync.conf is the same on both nodes, so you can simply copy it.

Now we need to define pacemaker as our resource manager with the following command:

[root@proxylb01/02 ~]# cat <<-END >>/etc/corosync/service.d/pcmk

service {

# Load the Pacemaker Cluster Resource Manager

name: pacemaker

ver: 1

}

END

Now we’re ready to test-fly it and …

[root@proxylb01/02 ~]# /etc/init.d/corosync start

… do some error checking …

[root@proxylb01/02 ~]# grep -e "corosync.*network interface" -e "Corosync Cluster Engine" -e "Successfully read main configuration file" /var/log/messages

Feb 10 11:03:20 proxylb01/02 corosync[1691]: [MAIN ] Corosync Cluster Engine ('1.2.3'): started and ready to provide service.

Feb 10 11:03:20 proxylb01/02 corosync[1691]: [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

Feb 10 11:03:21 proxylb01/02 corosync[1691]: [TOTEM ] The network interface [10.0.0.1/2] is now up.

… and some more.

[root@proxylb01/02 ~]# grep TOTEM /var/log/messages

Feb 10 11:03:20 proxylb01/02 corosync[1691]: [TOTEM ] Initializing transport (UDP/IP Unicast).

Feb 10 11:03:20 proxylb01/02 corosync[1691]: [TOTEM ] Initializing transmit/receive security: libtomcrypt SOBER128/SHA1HMAC (mode 0).

Feb 10 11:03:21 proxylb01/02 corosync[1691]: [TOTEM ] The network interface [10.0.0.1/2] is now up.

Feb 10 11:03:21 proxylb01/02 corosync[1691]: [TOTEM ] A processor joined or left the membership and a new membership was formed.

Feb 10 11:03:21 proxylb01/02 corosync[1691]: [TOTEM ] A processor joined or left the membership and a new membership was formed.

Pacemaker setup

Now we need to check Pacemaker …

[root@proxylb01/02 ~]# grep pcmk_startup /var/log/messages

Feb 10 11:03:21 proxylb01/02 corosync[1691]: [pcmk ] info: pcmk_startup: CRM: Initialized

Feb 10 11:03:21 proxylb01/02 corosync[1691]: [pcmk ] Logging: Initialized pcmk_startup

Feb 10 11:03:21 proxylb01/02 corosync[1691]: [pcmk ] info: pcmk_startup: Maximum core file size is: 18446744073709551615

Feb 10 11:03:21 proxylb01/02 corosync[1691]: [pcmk ] info: pcmk_startup: Service: 10

Feb 10 11:03:21 proxylb01/02 corosync[1691]: [pcmk ] info: pcmk_startup: Local hostname: proxylb01/02.int

… and start it …

[root@proxylb01/02 ~]# /etc/init.d/pacemaker start

Starting Pacemaker Cluster Manager: [ OK ]

… and do some more error checking:

[root@proxylb01/02 ~]# grep -e pacemakerd.*get_config_opt -e pacemakerd.*start_child -e "Starting Pacemaker" /var/log/messages

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1709]: info: get_config_opt: Found 'pacemaker' for option: name

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1709]: info: get_config_opt: Found '1' for option: ver

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1709]: info: get_config_opt: Defaulting to 'no' for option: use_logd

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1709]: info: get_config_opt: Defaulting to 'no' for option: use_mgmtd

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1709]: info: get_config_opt: Found 'off' for option: debug

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1709]: info: get_config_opt: Found 'yes' for option: to_logfile

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1709]: info: get_config_opt: Found '/var/log/cluster/corosync.log' for option: logfile

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1709]: info: get_config_opt: Found 'yes' for option: to_syslog

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1709]: info: get_config_opt: Defaulting to 'daemon' for option: syslog_facility

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1711]: info: main: Starting Pacemaker 1.1.5-5.el6 (Build: 01e86afaaa6d4a8c4836f68df80ababd6ca3902f): manpages docbook-manpages publican ncurses cman cs-quorum corosync snmp libesmtp

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1711]: info: start_child: Forked child 1715 for process stonith-ng

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1711]: info: start_child: Forked child 1716 for process cib

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1711]: info: start_child: Forked child 1717 for process lrmd

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1711]: info: start_child: Forked child 1718 for process attrd

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1711]: info: start_child: Forked child 1719 for process pengine

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1711]: info: start_child: Forked child 1720 for process crmd

We should also make sure that the process is running …

[root@proxylb01/02 ~]# ps axf | grep pacemakerd

6560 pts/0 S 0:00 pacemakerd

6564 ? Ss 0:00 \_ /usr/lib64/heartbeat/stonithd

6565 ? Ss 0:00 \_ /usr/lib64/heartbeat/cib

6566 ? Ss 0:00 \_ /usr/lib64/heartbeat/lrmd

6567 ? Ss 0:00 \_ /usr/lib64/heartbeat/attrd

6568 ? Ss 0:00 \_ /usr/lib64/heartbeat/pengine

6569 ? Ss 0:00 \_ /usr/lib64/heartbeat/crmd

and as a last check, look for any error messages in /var/log/messages with

[root@proxylb01/02 ~]# grep ERROR: /var/log/messages | grep -v unpack_resources

which should return nothing.

Cluster configuration

We’ll change into the cluster configuration and administration CLI with the command crm and check the default configuration, which should look like this:

crm(live)# configure show

node proxylb01.int

node proxylb02.int

property $id="cib-bootstrap-options" \

dc-version="1.1.5-5.el6-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2"

crm(live)# bye

If we now run the following:

[root@proxylb01/02 ~]# crm_verify -L

crm_verify[1770]: 2012/02/10_11:08:22 ERROR: unpack_resources: Resource start-up disabled since no STONITH resources have been defined

crm_verify[1770]: 2012/02/10_11:08:22 ERROR: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option

crm_verify[1770]: 2012/02/10_11:08:22 ERROR: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity

Errors found during check: config not valid

-V may provide more details

We see that STONITH has not been configured, but we don’t need it as we have no filesystem or database running which could get corrupted, so we disable it.

[root@proxylb01/02 ~]# crm configure property stonith-enabled=false

[root@proxylb01/02 ~]# crm_verify -L

Now we download the OCF resource agent script for haproxy:

[root@proxylb01/02 ~]# wget -O /usr/lib/ocf/resource.d/heartbeat/haproxy http://github.com/russki/cluster-agents/raw/master/haproxy

[root@proxylb01/02 ~]# chmod 755 /usr/lib/ocf/resource.d/heartbeat/haproxy

After this we’re ready to configure the cluster with the following commands:

[root@proxylb01 ~]# crm

crm(live)# configure

crm(live)configure# primitive haproxyIP03 ocf:heartbeat:IPaddr2 params ip=10.0.0.3 cidr_netmask=32 op monitor interval=5s

crm(live)configure# group haproxyIPs haproxyIP03 meta ordered=false

crm(live)configure# property no-quorum-policy=ignore

crm(live)configure# primitive haproxyLB ocf:heartbeat:haproxy params conffile=/etc/haproxy/haproxy.cfg op monitor interval=30s

crm(live)configure# colocation haproxyWithIPs INFINITY: haproxyLB haproxyIPs

crm(live)configure# order haproxyAfterIPs mandatory: haproxyIPs haproxyLB

crm(live)configure# commit

These commands add the floating IP address to the cluster and then create a group of IP addresses in case we later need more than one. We then define that we need no quorum and add haproxy to the mix. Finally we make sure that haproxy and its IP address are always on the same node and that the IP address is brought up before haproxy is.

Now the cluster setup is done and you should see the haproxy running on one node with crm_mon -1.
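
Besides crm_mon, you can check directly on a node whether it currently holds the floating IP. The following is only a sketch: holds_vip is a made-up helper that parses the one-line-per-address output of ip -o -4 addr show, and 10.0.0.3 is the floating address used in this howto.

```shell
#!/bin/sh
# holds_vip VIP
# Hypothetical helper: reads `ip -o -4 addr show` style output on stdin and
# succeeds if one of the listed addresses is exactly VIP.
holds_vip() {
    awk -v vip="$1" '
        {
            for (i = 1; i < NF; i++)
                if ($i == "inet") {
                    split($(i + 1), a, "/")      # strip the /prefix length
                    if (a[1] == vip) found = 1
                }
        }
        END { exit found ? 0 : 1 }
    '
}

# Only query the system if the ip tool is available.
if command -v ip >/dev/null 2>&1; then
    if ip -o -4 addr show | holds_vip 10.0.0.3; then
        echo "this node currently holds 10.0.0.3"
    else
        echo "10.0.0.3 is not on this node"
    fi
fi
```

Exactly one of the two nodes should report that it holds the address; after a failover the answer should flip.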

haproxy configuration

We now only need to set up haproxy, which is done by editing the following file: /etc/haproxy/haproxy.cfg

We make sure that haproxy sends log messages by having the following in the global section

log 127.0.0.1 local2 notice

and set maxconn 8000 (or more if you need more). The defaults section looks like this in my setup:

log global

# 30 minutes of waiting for a web request is crazy,

# but some users do it, and then complain the proxy

# broke the interwebs.

timeout client 30m

timeout server 30m

# If the server doesnt respond in 4 seconds its dead

timeout connect 4s

And now the actual load balancer configuration:

listen http_proxy 10.0.0.3:3128

mode tcp

balance roundrobin

server proxynode01 10.0.0.11 check

server proxynode02 10.0.0.12 check

server proxynode03 10.0.0.13 check

server proxynode04 10.0.0.14 check

If your caches have public IP addresses and are not NATed to one outgoing IP address, you may wish to change the balance algorithm to source. Some web applications get confused when a client’s IP address changes between requests. With balance source, clients are still spread across all web proxies, but once a client is assigned to a specific proxy, it continues to use that proxy.
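
The idea behind source-based balancing can be illustrated in a few lines of shell. This is not haproxy’s actual hash function; the sketch uses cksum as a stand-in hash, and pick_proxy is a made-up name. It only shows why the same client address always lands on the same backend as long as the number of backends stays the same.

```shell
#!/bin/sh
# pick_proxy CLIENT_IP PROXY_COUNT
# Hypothetical illustration: hash the client address and reduce it modulo
# the number of proxies. haproxy uses its own hash; cksum is a stand-in.
pick_proxy() {
    client_ip=$1
    proxy_count=$2
    hash=$(printf '%s' "$client_ip" | cksum | cut -d ' ' -f 1)
    echo $((hash % proxy_count))
}

# The first and the third client are the same address, so they map to the
# same proxy node.
for ip in 192.0.2.10 192.0.2.11 192.0.2.10; do
    echo "$ip -> proxynode0$(( $(pick_proxy "$ip" 4) + 1 ))"
done
```

With roundrobin, in contrast, consecutive connections from one client would be handed to different proxies.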

We would also like to see some stats, so we configure the following:

listen statslb01/02 :8080 # choose different names for the 2 nodes

mode http

stats enable

stats hide-version

stats realm Haproxy\ Statistics

stats uri /

stats auth admin:xxxxxxxxx

rsyslog setup

haproxy does not write its own log files, so we need to configure rsyslog for this. We add the following to the MODULES section of /etc/rsyslog.conf (the address line goes before $UDPServerRun so that the listener is bound to localhost only):

$ModLoad imudp.so

$UDPServerAddress 127.0.0.1

$UDPServerRun 514

and the following to the RULES section:

local2.* /var/log/haproxy.log

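Finally, restart rsyslog so that it picks up the new configuration (for example with /etc/init.d/rsyslog restart) and reload the haproxy configuration with

[root@proxylb01 ~]# /etc/init.d/haproxy reload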
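
If /var/log/haproxy.log stays empty afterwards, a quick look at the rsyslog configuration can narrow things down. The sketch below uses a made-up helper, rsyslog_ready, that only checks whether the UDP module, the listener on port 514 and a local2 rule are present in the given file; it does not check whether rsyslog has actually been restarted.

```shell
#!/bin/sh
# rsyslog_ready FILE
# Hypothetical helper: succeeds if FILE loads imudp, starts a UDP listener
# on port 514 and contains a rule for the local2 facility.
rsyslog_ready() {
    conf=$1
    grep -Eq '^[[:space:]]*\$ModLoad[[:space:]]+imudp' "$conf" &&
    grep -Eq '^[[:space:]]*\$UDPServerRun[[:space:]]+514' "$conf" &&
    grep -Eq '^[[:space:]]*local2\.\*[[:space:]]+[^[:space:]]' "$conf"
}

if rsyslog_ready /etc/rsyslog.conf 2>/dev/null; then
    echo "rsyslog.conf has the UDP listener and the local2 rule"
else
    echo "rsyslog.conf is missing one of the required lines"
fi
```

Once the configuration is in place, sending a test message with logger -p local2.notice "haproxy log test" should produce a line in /var/log/haproxy.log.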

After all this work, you should have a working high availability haproxy setup for your proxies. If you have any comments or questions, please don’t hesitate to leave a comment!

32 Comments »


Nice information..good work..

Comment by Bhaskar Chowdhury — February 13, 2012 #

[…] Howto setup a haproxy as fault tolerant / high available load balancer for multiple caching web prox… (robert.penz.name) […]

Pingback by Setting Up a Rackspace Loadbalancer and New Cloud Servers - Mike Levin — March 6, 2012 #

you have a typo, you say wget -0…. haprox, and the next line has haprox as well, instead of haproxy. this one took me two hours lolz

Comment by trent — March 15, 2012 #

You’re correct. Corrected it. Thx.

Comment by robert — March 15, 2012 #

[…] Fault Tolerant Load Balancer with HAProxy Happy Discoveries of the day: […]

Pingback by Network lesson: Virtual IP and Private VLAN « Summer Peppermint — June 26, 2012 #

Nice article with good diagram! You should improve it a lot by using HTTP mode and url-based load balancing, it will significantly improve your proxy cache hit ratio by always hitting the same caches for the same objects. Combine with with “hash-type consistent” and you’ll even support adding/removing caches on the fly without affecting distribution.

Comment by Willy Tarreau — August 1, 2012 #

I’m using “balance source”, which also works nicely. Are you sure that HTTP mode works, as the browsers are sending a “CONNECT” and then e.g. SSL traffic?

Comment by robert — August 1, 2012 #

Hi

I am trying to duplicate your setup here but have used the following IPs instead.

192.168.0.160 vm-proxy-01

192.168.0.170 vm-proxy-02

192.168.0.200 proxy (this is the floating ip)

When I try to configure the cluster I get:

crm(live)configure# primitive haproxyLB ocf:heartbeat:haproxy params conffile=/etc/haproxy/haproxy.cfg op monitor interval=30s

lrmadmin[28965]: 2012/08/01_10:51:06 ERROR: lrm_get_rsc_type_metadata(578): got a return code HA_FAIL from a reply message of rmetadata with function get_ret_from_msg.

ERROR: ocf:heartbeat:haproxy: could not parse meta-data:

ERROR: ocf:heartbeat:haproxy: no such resource agent

Any ideas?

Comment by Jared — August 1, 2012 #

The solution to:

crm(live)configure# primitive haproxyLB ocf:heartbeat:haproxy params conffile=/etc/haproxy/haproxy.cfg op monitor interval=30s

lrmadmin[28965]: 2012/08/01_10:51:06 ERROR: lrm_get_rsc_type_metadata(578): got a return code HA_FAIL from a reply message of rmetadata with function get_ret_from_msg.

ERROR: ocf:heartbeat:haproxy: could not parse meta-data:

ERROR: ocf:heartbeat:haproxy: no such resource agent

Was correctly setting the file permission (to 755) for the haproxy script.

-rwxr-xr-x 1 root root 5297 Aug 1 10:26 haproxy

Comment by Jared — August 1, 2012 #

is it possible to work on a config file instead of “crm configure”, because I find the syntax error prone. For example “…params conffile=/etc/haproxy/haproxy.cfg op monitor interval=30s”

How the cluster manager will decide which are params for the ‘primitive’ and which are parameters for cman – probably by the magic word “op” it starts parsing own parameters ?

found an article(scientific lol) on this topic: http://ijaest.iserp.org/archieves/12-Jn-1-15-11/Vol-No.7-Issue-No.2/7.IJAEST-Vol-No-7-Issue-No-2-Autonomic-Fault-Tolerance-using-HAProxy-in-Cloud-Environment-222-227.pdf

Comment by A.Genchev — August 7, 2012 #

If you’re using the crm CLI it does error checking. You can also edit it and then commit it (press tab tab in the configure mode) … just don’t use crm directly from bash with the commands as parameters, as then there is no error checking …

Comment by robert — August 7, 2012 #

Have followed this guide and have a couple of issues.

First when I issue the crm_mon -1 command I only see one node listed not two. The same is true for issuing the configure show command. Also I am getting an error with the crm_mon -1 command.

============

Last updated: Wed Sep 12 16:27:02 2012

Last change: Wed Sep 12 14:40:16 2012 via cibadmin on proxy01

Stack: openais

Current DC: proxy01 – partition WITHOUT quorum

Version: 1.1.7-6.el6-148fccfd5985c5590cc601123c6c16e966b85d14

1 Nodes configured, 2 expected votes

2 Resources configured.

============

Online: [ proxy01 ]

Resource Group: haproxyIPs

haproxyIP03 (ocf::heartbeat:IPaddr2): Started proxy01

haproxyLB (ocf::heartbeat:haproxy): Started proxy01

Failed actions:

haproxyIP03_monitor_0 (node=proxy01, call=2, rc=-2, status=Timed Out): unknown exec error

Comment by Steve — September 12, 2012 #

1. I see only one node configured (“1 Nodes configured, 2 expected votes”), so configure the second one too; you need to do the first steps on both nodes.

2. Take a look into the /usr/lib/ocf/resource.d/heartbeat/haproxy file and search for the monitor section. Try the steps by hand to see whether they work or whether a required program is not installed.

Comment by robert — September 15, 2012 #

when i do this part:

crm(live)# configure show

i only get:

node localhost

instead of:

node proxylb01.int

node proxylb02.int

why is that?

Comment by Lior — October 31, 2012 #

I am following your document and I ran across an issue. When I try to start the pacemaker service it is not working. I am good up to that point. I also tried “service pacemaker start” .. no good. Any tips??

I am installing pm 1.0.12-1.e15 on RHEL5

Comment by phillip — November 7, 2012 #

addition to the question 15… it would seem that the pacemaker did not install correctly.

Comment by phillip — November 8, 2012 #

addition to 15 and 16.

http://www.gossamer-threads.com/lists/linuxha/pacemaker/71761

make sure you upgrade Pacemaker <= 1.1.2 so that it will work

Comment by phillip — November 8, 2012 #

Hi!

The version is quite critical … that’s the reason I talked about RHEL6 … they may also change things in the service packs, e.g. the non-multicast feature is quite new.

Comment by robert — November 8, 2012 #

Hi! I could have sworn I’ve been to this website before but after reading through some of the post I realized it’s new to me. Anyways, I’m definitely happy I found it and I’ll be bookmarking and checking back often!

Comment by radio ink — June 22, 2013 #

I used to be recommended this web site by my cousin. I am no longer sure whether this post is written by him as nobody else recognize such targeted about my problem. You are incredible! Thank you!

Comment by qHacks.PL — July 7, 2013 #

Since there is no crm shell on rhel 6.4 anymore, would be nice to update this guide with ‘pcs’ commands:

pcs property set stonith-enabled=false

pcs property set no-quorum-policy=ignore

pcs resource create ClusterIP IPaddr2 ip=10.0.0.3 cidr_netmask=32 op monitor interval=5s

pcs resource create haproxyLB haproxy conffile=/etc/haproxy/haproxy.cfg op monitor interval=30s

pcs constraint colocation add haproxyLB ClusterIP INFINITY

pcs constraint order ClusterIP then haproxyLB

Comment by sba — August 27, 2013 #

I got this web site from my buddy who told me regarding this website and now this time I am visiting this web page and reading very informative content here.

Comment by Jannette — October 14, 2013 #

This was very useful!

In addition to comment 21, it also helps to add iflabel=vip to the IP definition which allows you to see the VIP detail using ifconfig.

You also need to make sure the haproxy.cfg configuration has both ‘daemon’ and the ‘pidfile’ declarations in the global part of the config or your cluster will not be able to start haproxy at all due to RA script failure.

Comment by Craig — March 13, 2014 #

It would be great if you could make a video about it in youtube. It would be much easier to follow

Comment by Aziz — November 18, 2014 #

[…] Howto setup a haproxy as fault tolerant / high available load balancer for multiple caching web prox… […]

Pingback by Automate the Provisioning and Configuration of HAProxy and an Apache Web Server Cluster Using Foreman | Programmatic Ponderings — February 21, 2015 #

Would you consider updating this for CentOS 7?

It would be GREAT 🙂

Comment by tunca — August 3, 2016 #

For CentOS 7 you need to change to keepalived, and I recommend letting haproxy run on both nodes the whole time. The current VRRP master gets the traffic, as the IP is there. For this you need to configure the system to allow binding to IP addresses which are not currently up. To do this, set net.ipv4.ip_nonlocal_bind=1 in /etc/sysctl.conf.

Hope that helps.

Comment by robert — August 3, 2016 #

Hi,

Can we do the load balancing/high availability with URLs only (i.e. without using IP addresses)?

like HA FQDN: ha.abcd.local

Server 1 FQDN: s1.abcd.local

Server 2 FQDN: s2.abcd.local

Server 3 FQDN: s3.abcd.local

Comment by Max — December 12, 2016 #

Thanks for the great post. Got slightly lost half way through, but think i worked it out in the end. haven’t found a good post like this anywhere else for load balancing, so much appreciated!

Comment by Ryan — July 9, 2017 #

Great guide, thanks Robert. One follow up question – since writing this post, do you know if there is an API in haproxy to detect automatically if a node is set up / goes down? I’d be willing to pay for it if so (as would my clients!)

Note, the research paper link shared by A. Genchev above is not working. Here it is on Research Gate (https://www.researchgate.net/publication/228921529_Autonomic_Fault_Tolerance_using_HAProxy_in_Cloud_Environment), also helpful for anyone looking to supplement Robert’s guide above.

Comment by Ludovic — May 6, 2019 #

Side note, this can be used to set up your own DNS and HAProxy based Netflix tunnel, and addresses the common ‘Netflix proxy error’ of doom – https://privacycanada.net/netflix-proxy-error/

Comment by Ludovic — May 6, 2019 #

Take a look at https://www.envoyproxy.io/ which may provide that feature or at least can be changed without restart.

Comment by robert — May 6, 2019 #