The corporate data center is undergoing a major transformation the likes of which haven't been seen since Intel-based servers started replacing mainframes decades ago. It isn't just the server platform: the entire infrastructure from top to bottom is seeing major changes as applications migrate to private and public clouds, networks get faster, and virtualization becomes the norm.

All of this means tomorrow's data center is going to look very different from today's. Processors, systems, and storage are getting better integrated, more virtualized, and more capable of making use of greater networking and Internet bandwidth. At the heart of these changes are major advances in networking. We're going to examine six specific trends driving the evolution of the next-generation data center and discover what both IT insiders and end-user departments outside of IT need to do to prepare for these changes.

Beyond 10Gb networks

Network connections are getting faster to be sure. Today it's common to find 10-gigabit Ethernet (GbE) connections to some large servers. But even 10GbE isn't fast enough for data centers that are heavily virtualized or handling large-scale streaming audio/video applications. As your population of virtual servers increases, you need faster networks to handle the higher information loads required to operate. Starting up a new virtual server might save you from buying a physical server, but it doesn't lessen the data traffic over the network—in fact, depending on how your virtualization infrastructure works, a virtual server can impact the network far more than a physical one. And as more audio and video applications are used by ordinary enterprises in common business situations, the file sizes balloon too. This results in multi-gigabyte files that can quickly fill up your pipes—even the big 10Gb internal pipes that make up your data center's LAN.
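To put rough numbers on how quickly large media files can occupy a link, here is a minimal Python sketch. The 5GB file size, the helper name `transfer_seconds`, and the `efficiency` parameter are illustrative assumptions rather than figures from any particular network, and the calculation ignores protocol overhead, disk speed, and competing traffic.

```python
def transfer_seconds(file_gigabytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Idealized time to move one file across a link that it has all to itself.

    file_gigabytes: file size in decimal gigabytes (1 GB = 8e9 bits)
    link_gbps:      nominal link speed in gigabits per second
    efficiency:     assumed fraction of the nominal speed actually achieved
    """
    bits_to_send = file_gigabytes * 8e9
    usable_bits_per_second = link_gbps * 1e9 * efficiency
    return bits_to_send / usable_bits_per_second


if __name__ == "__main__":
    # Hypothetical 5 GB video file on three common Ethernet speeds.
    for gbps in (1, 10, 40):
        seconds = transfer_seconds(5, gbps)
        print(f"{gbps:>3} Gb/s link: {seconds:.1f} s to move a 5 GB file")
```

Even in this best case, a single 5GB file ties up a 10Gb link for about four seconds, so a few dozen such transfers in flight at once would keep the link saturated for minutes.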

Part of coping with all this data transfer is being smarter about identifying network bottlenecks and removing them, such as immature network interface card drivers that slow down server throughput. Bad or sloppy routing paths can introduce network delays too. Typically, both bad drivers and bad routes haven't previously been examined carefully because they were sufficient to handle less demanding traffic patterns.

It doesn't help that more bandwidth can sometimes require new networking hardware. The vendors of these products are well prepared, and there are now numerous routers, switches, and network adapter cards that operate at 40- and even 100-gigabit Ethernet speeds. Plenty of vendors sell this gear: Dell's Force10 division, Mellanox, HP, Extreme Networks, and Brocade. It's nice to have product choices, but the adoption rate for 40GbE equipment is still rather small.

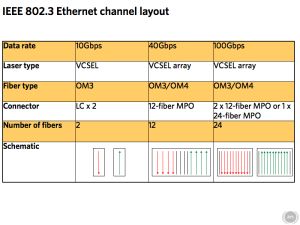

Using this fast gear is complicated by two issues. First is price: the stuff isn't cheap. The price of each 40Gb port on a switch is typically $2,500, way more than a typical 10Gb port price. Depending on the nature of your business, these higher per-port prices might be justified, but the initial outlay isn't the only cost. The second issue is cabling: most of these devices also require new kinds of wiring connectors that will make implementation of 40GbE difficult, and a smart CIO will keep total cost of ownership in mind when looking to expand beyond 10Gb.

As Ethernet has attained faster and faster speeds, the cable plant needed to run these faster networks has slowly evolved. The old RJ45 category 5 or 6 copper wiring and fiber connectors won't work with 40GbE. New connections using the Quad Small Form-factor Pluggable or QSFP standard will be required. Cables with QSFP connectors can't be "field terminated," meaning IT personnel or cable installers can't cut orange or aqua fiber to length and attach SC or LC heads themselves. Data centers will need to figure out their cabling lengths and pre-order custom cables that are manufactured with the connectors already attached. This is potentially a big barrier for data centers used to working primarily with copper cables, and it also means any current investment in your fiber cabling likely won't cut it for these higher-speed networks of the future either.

Still, as IT managers get more of an understanding of QSFP, we can expect to see more 40 and 100 gigabit Ethernet network legs in the future, even if the runs are just short ones that go from one rack to another inside the data center itself. Many of these runs will terminate at "top of rack" switches, which connect to the servers in their rack over slower links and tie back to a central switch or set of switches over a high-speed connection. A typical configuration for the future might be 1GbE or 10GbE connections from individual servers within one rack to a switch within that rack, and then a 40GbE uplink from that switch back to larger edge or core network switches. And as these faster networks are deployed, expect to see major upgrades in network management, firewalls, and other applications to handle the higher data throughput.
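One way to reason about a rack design like this is its oversubscription ratio: the total bandwidth of the server-facing ports divided by the bandwidth of the uplinks. The Python sketch below is only an illustration; the function name and the count of 40 servers per rack are assumptions, not figures from any vendor.

```python
def oversubscription_ratio(servers: int, server_gbps: float,
                           uplinks: int, uplink_gbps: float) -> float:
    """Ratio of server-facing bandwidth to uplink bandwidth on one rack switch.

    A ratio of 1.0 means every server could transmit at full speed at once
    without queuing at the uplink; higher values mean the uplink is shared.
    """
    downstream_gbps = servers * server_gbps
    upstream_gbps = uplinks * uplink_gbps
    return downstream_gbps / upstream_gbps


if __name__ == "__main__":
    # Hypothetical rack of 40 servers behind a single 40GbE uplink.
    print(oversubscription_ratio(servers=40, server_gbps=1, uplinks=1, uplink_gbps=40))
    print(oversubscription_ratio(servers=40, server_gbps=10, uplinks=1, uplink_gbps=40))
```

With 1GbE servers the single uplink is never the constraint, while the same rack with 10GbE servers shares the uplink ten ways, which suggests either more uplinks or an acceptance that not every server will burst at once.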

The rack as a data center microcosm

In the old days when x86 servers were first coming into the data center, you'd typically see information systems organized into a three-tier structure: desktops running the user interface or presentation software, a middle tier containing the logic and processing code, and the data tier contained inside the servers and databases. Those simple days are long gone.

Still living on from that time, though, are data centers that have separate racks, staffs, and management tools for servers, for storage, for routers, and for other networking infrastructure. That worked well when the applications were relatively separate and didn't rely on each other, but that doesn't work today when applications have more layers and are built to connect to each other (a Web server to a database server to a scheduling server to a cloud-based service, as a common example). And all of these pieces are running on virtualized machines anyway.

Today's equipment racks are becoming more "converged" and are handling storage, servers, and networking tasks all within a few inches of each other. The notion first started with blade technology, which puts all the essential elements of a computer on a single expansion card that can easily slip into a chassis. Blades have been around for many years, but the leap was using them along with the right management and virtualization software to bring up new instances of servers, storage, and networks. Packing many blade servers into a single large chassis also dramatically increases the density available in a single rack.

It is more than just bolting a bunch of things inside a rack: vendors selling these "data center in a rack" solutions are providing pre-engineering, testing, and integration services. They also have sample designs that make it easy to specify the particular components and that reduce cable clutter, and they provide software to automate management. This arrangement improves throughput and makes the various components easier to manage. Several vendors offer this type of computing gear, including Dell with its Active Infrastructure and IBM with its PureSystems. It used to be necessary for different specialty departments within IT to configure different components here: one group for the servers, one for the networking infrastructure, one for storage, etc. That took a lot of coordination and effort. Now it can all be done coherently and from a single source.

Let's look at Dell's Active Infrastructure as an example. Dell claims it eliminates more than 75 percent of the steps needed to power on a server and connect it to your network. It comes in a rack with Intel-based PowerEdge servers, SAN arrays from Dell's Compellent division, and blades from Dell's Force10 division that can be used for input/output aggregation and high-speed network connections. The entire package is very energy efficient and you can deploy these systems quickly. We've seen demonstrations from IBM and Dell where a complete network cluster is brought up from a cold start within an hour, all managed from a Web browser by a system administrator who could be sitting on the opposite side of the world.

Beyond the simple SAN

As storage area networks (SANs) proliferate, they are getting more complex. SANs now use more capable storage management tools to make them more efficient and flexible. It used to be the case that SAN administration was a very specialized discipline that required arcane skills and deep knowledge of array performance tuning. That is not the case any longer, and as SAN tool sets improve, even IT generalists can bring one online.

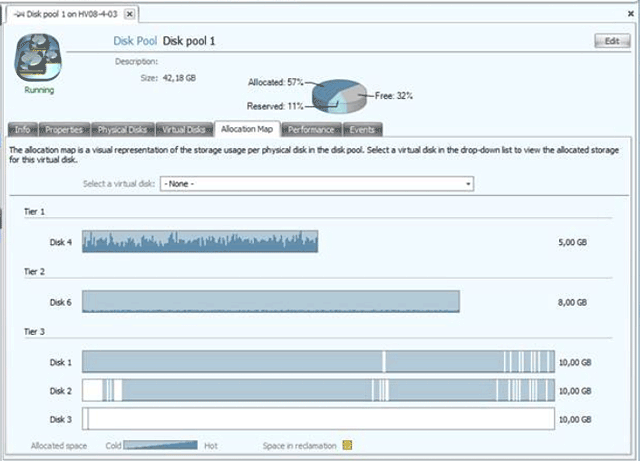

The converged data center racks from Dell and others described above are just one example of how SANs have been integrated into other products. Added to these efforts, there is a growing class of management tools that can help provide a "single pane of glass" view of your entire SAN infrastructure. These tools can also make your collection of virtual disks more efficient.

The dilemma here is that you want enough space available to each virtual drive so it has room to grow, which often means tying up space that could otherwise be used. This is where dynamic thin provisioning comes into play. Most SAN arrays have some type of thin provisioning built in and let you allocate storage without physically reserving it up front: a 1TB thin-provisioned volume reports itself as being 1TB in size but only takes up the amount of space in use by its data. In other words, a physical 1TB chunk of disk could be "thick" provisioned into a single 1TB volume or thin provisioned into maybe a dozen 1TB volumes, letting you oversubscribe the physical disk. Thin provisioning can play directly into your organization's storage forecasting, letting you establish maximum volume sizes early and then buy physical disk to track with each volume's growth.
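The bookkeeping behind the dozen-volumes example can be sketched with a toy model in Python. This is not how any particular array implements thin provisioning; the `ThinPool` class, its method names, and the 50GB written to each volume are hypothetical, chosen only to show advertised size and consumed space being tracked separately.

```python
class ThinPool:
    """Toy model of a thin-provisioned pool; all sizes are whole gigabytes."""

    def __init__(self, physical_gb):
        self.physical_gb = physical_gb
        self.volumes = {}  # volume name -> [advertised_gb, used_gb]

    def create_volume(self, name, advertised_gb):
        # Creating a thin volume consumes no physical space yet.
        self.volumes[name] = [advertised_gb, 0]

    def write(self, name, gb):
        volume = self.volumes[name]
        if volume[1] + gb > volume[0]:
            raise ValueError(f"{name} would exceed its advertised size")
        if self.used_gb() + gb > self.physical_gb:
            raise RuntimeError("pool is out of physical space")
        volume[1] += gb  # physical space is consumed only when data lands

    def used_gb(self):
        return sum(used for _, used in self.volumes.values())

    def advertised_gb(self):
        return sum(size for size, _ in self.volumes.values())

    def oversubscription(self):
        return self.advertised_gb() / self.physical_gb


# A 1 TB (1,000 GB) pool carved into twelve thin 1 TB volumes.
pool = ThinPool(physical_gb=1000)
for n in range(12):
    pool.create_volume(f"vol{n}", advertised_gb=1000)
    pool.write(f"vol{n}", 50)  # assumed 50 GB of real data per volume

print(pool.advertised_gb(), pool.used_gb(), pool.oversubscription())
```

The pool advertises twelve times its physical capacity while using 600GB of it. The `RuntimeError` branch is the risk that comes with oversubscription: if the volumes grow faster than new disk is purchased, writes begin to fail even though each volume still reports free space.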

Another trick many SANs can do these days is data deduplication. There are many different deduplication methods, with each vendor employing its own "secret sauce." But they all aim to reduce or eliminate the same chunks of data being stored multiple times. When employed with virtual machines, data deduplication means commonly used operating system and application files don't have to be stored in multiple virtual hard drives and can share one physical repository. Ultimately, this allows you to save on the copious space you need for these files. For example, a hundred Windows virtual machines all have essentially the same content in their "Windows" directories, their "Program Files" directories, and many other places. Deduplication ensures those common pieces of data are only stored once, freeing up tremendous amounts of space.
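Since each vendor's method is proprietary, the following Python sketch shows only the general idea, using one simple and well-known approach: split data into fixed-size chunks and store each distinct chunk once, keyed by its SHA-256 hash. The `DedupStore` class, the 4KB chunk size, and the synthetic "OS image" are assumptions for illustration and do not represent any SAN product's algorithm.

```python
import hashlib


class DedupStore:
    """Toy fixed-size-chunk deduplicating store (illustrative only)."""

    def __init__(self, chunk_size=4096):
        self.chunk_size = chunk_size
        self.chunks = {}  # SHA-256 hex digest -> chunk bytes, stored once
        self.files = {}   # file name -> ordered list of chunk digests

    def put(self, name, data):
        digests = []
        for start in range(0, len(data), self.chunk_size):
            chunk = data[start:start + self.chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(digest, chunk)  # keep only the first copy
            digests.append(digest)
        self.files[name] = digests

    def get(self, name):
        # Reassemble the file from its chunk references.
        return b"".join(self.chunks[d] for d in self.files[name])

    def logical_bytes(self):
        return sum(len(self.chunks[d]) for ds in self.files.values() for d in ds)

    def physical_bytes(self):
        return sum(len(chunk) for chunk in self.chunks.values())


# Synthetic stand-in for OS files shared by every VM: four distinct 4 KB chunks.
os_image = b"".join(bytes([i]) * 4096 for i in range(4))

store = DedupStore()
for n in range(100):
    unique_data = f"vm-{n}".encode().ljust(4096, b"\0")  # one per-VM chunk
    store.put(f"vm{n}.vhd", os_image + unique_data)

print(store.logical_bytes(), store.physical_bytes())
```

Here a hundred virtual disks that would occupy 2,048,000 bytes if stored separately take 425,984 bytes, because the four shared chunks are kept once and only the per-VM chunk is stored for each machine. Real arrays often use variable-size chunking and have to handle hash collisions and reference counting, which this sketch leaves out.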
