How to deploy a fault tolerant cluster with continuous or high availability

Some companies cannot afford to have their services down. If a server fails, a cellular operator might lose its billing system and, with it, connectivity for all of its clients. Recognizing the potential impact of such situations leads to the idea of always having a plan B.

This article sheds light on different ways to protect against server failures, as well as on the architectures used to deploy VMmanager Cloud, a control panel for building High Availability clusters.

Preface

Terminology in the area of fault tolerance differs from website to website. To avoid mixing up terms and definitions, let’s outline the ones used in this article:

- Fault Tolerance (FT) is the ability of a system to continue its operation after the failure of one of its components.

- Cluster is a group of servers (cluster nodes) connected through communication channels.

- Fault Tolerant Cluster (FTC) is a cluster where the failure of one server doesn’t result in complete unavailability of the whole cluster. Functions of the failed node are automatically reassigned between the remaining nodes.

- Continuous Availability (CA) means that a user can use the service at any time without experiencing interruptions, no matter how long ago a node failed.

- High Availability (HA) means that a user might experience service interruptions if one of the nodes goes down; however, the system recovers automatically with minimal downtime.

- CA cluster is a Continuous Availability cluster.

- HA cluster is a High Availability cluster.

Suppose we need to deploy a cluster of 10 nodes with virtual machines running on each node. The goal is to keep the virtual machines protected when a server fails. Dual-CPU servers are used to maximize the computing density of the racks.

At first glance, the most attractive option for a company is to deploy a Continuous Availability cluster, in which a service keeps running after equipment has failed. Indeed, Continuous Availability is a must if you need to keep a billing system running or automate a continuous production process. However, this approach also has its pitfalls, which are covered below.

Continuous Availability

Continuity of a service is only feasible if an exact copy of the physical or virtual machine running this service exists and is available at any given time. This redundancy model is called 2N. Creating a copy of the server only after the equipment has failed would take time, causing a service interruption. Furthermore, in this case it would not be possible to retrieve the RAM contents from the failed server, which means that all the information held there would be lost.

CA can be provided in two ways: at the hardware level and at the software level. Let’s look at each of them in greater detail.

The hardware method relies on a “doubled” server in which all components are duplicated and calculations are executed simultaneously and independently. Synchronization is provided by a dedicated node that compares the results coming from both halves. If the node detects a discrepancy, it tries to identify the problem and correct the error. If the error cannot be corrected, the system switches off the failed module.

Stratus, a manufacturer of CA servers, guarantees that the overall downtime of the system doesn’t exceed 32 seconds per year. Such results are achieved by using special equipment. According to Stratus representatives, one CA server with dual CPUs in each synchronized module costs around $160 000, depending on specifications. The total price for the whole 10-node CA cluster would therefore be about $1 600 000.
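To put these figures in perspective, the following Python sketch converts the quoted yearly downtime into an availability percentage and reproduces the hardware total for the 10-node cluster. The function and constant names are illustrative, and a 365-day year is assumed.

```python
# Assumption: a non-leap year of 365 days.
SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def availability_percent(downtime_seconds_per_year: float) -> float:
    """Share of the year, in percent, during which the service is up."""
    return 100.0 * (1.0 - downtime_seconds_per_year / SECONDS_PER_YEAR)


def hardware_cost(nodes: int, price_per_server: int) -> int:
    """Total price of a cluster built from identical CA servers."""
    return nodes * price_per_server


if __name__ == "__main__":
    # 32 seconds of downtime per year, as quoted above.
    print(f"{availability_percent(32):.4f}%")
    # 10 servers at roughly $160 000 each.
    print(hardware_cost(10, 160_000))
```

With the numbers from the text, 32 seconds of downtime per year corresponds to an availability of roughly 99.9999%, and ten servers come to $1 600 000.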

The software method

At the time of writing, the most popular software tool for deploying a Continuous Availability cluster is VMware vSphere. The Continuous Availability technology in this product is called Fault Tolerance.

Unlike the hardware method, this technology has certain requirements, such as the following:

- CPU on the physical host:

  - Intel with Sandy Bridge architecture (or newer). Avoton is not supported.

  - AMD Bulldozer (or newer).

- Machines with Fault Tolerance must be connected to a single 10 Gb low-latency network. VMware highly recommends using a dedicated network.

- Not more than 4 virtual CPUs per VM.

- Not more than 8 virtual CPUs per physical host.

- Not more than 4 virtual machines per physical host.

- Virtual machine snapshots are unavailable.

- Storage vMotion is unavailable.

The full list of limitations and incompatibilities can be found in the official documentation.

vSphere licensing is based on physical CPUs. The price starts at $1750 per license plus $550 for the annual subscription and support. Cluster management automation also requires VMware vCenter Server, which costs over $8000. Since the 2N model is used to provide Continuous Availability, building a cluster of 10 nodes with virtual machines requires purchasing 10 additional replica servers, with licenses for each of them.

The overall software cost would be: 2 (CPUs per server) × [10 (nodes with virtual machines) + 10 (replica nodes)] × $(1750 + 550) (license and subscription cost per CPU) + $8000 (VMware vCenter Server) = $100 000. All prices are rounded.

Specific node configurations are not described in this article, as server components always differ depending on the purpose of the cluster. Network equipment is also not described, since it should be identical in every case. This article focuses on the component that definitely varies: the license cost.
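The same calculation can be written as a small Python function, which makes it easy to try other cluster sizes. This is only a sketch: the function and parameter names are illustrative, and the default values are the rounded prices quoted above.

```python
def vsphere_software_cost(
    cpus_per_server: int = 2,
    vm_nodes: int = 10,
    replica_nodes: int = 10,
    license_per_cpu: int = 1750,
    support_per_cpu: int = 550,
    vcenter: int = 8000,
) -> int:
    """Software cost in USD for a 2N cluster licensed per physical CPU."""
    # Every CPU in both the working and the replica servers needs a license.
    licensed_cpus = cpus_per_server * (vm_nodes + replica_nodes)
    return licensed_cpus * (license_per_cpu + support_per_cpu) + vcenter


if __name__ == "__main__":
    print(vsphere_software_cost())  # 40 CPUs * $2300 + $8000 = 100000
```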

It is also important to mention the products that are no longer developed and supported.

Remus is a product based on Xen virtualization. It is a free, open source solution that uses micro-snapshot technology. Unfortunately, its documentation hasn’t been updated for a long time: the installation guide provides instructions for Ubuntu 12.10, whose end of life was announced in 2014. Even a Google search didn’t turn up any company using Remus in production.

Attempts were made to modify QEMU to build Continuous Availability clusters on this technology. There are two projects that announced their work in this direction.

The first one is Kemari, an open source project led by Yoshiaki Tamura. This project intended to use QEMU live migration. The last commit was made in February 2011, which suggests that development has stalled and will not be continued.

The second one is Micro Checkpointing, an open source project founded by Michael Hines. No activity has appeared in its changelog over the last year, which resembles the situation with Kemari.

These facts lead us to conclude that, to date, there is simply no way to achieve Continuous Availability on KVM virtualization.

Despite all the advantages of Continuous Availability systems, there are many obstacles to deploying and operating such solutions. Nevertheless, in some cases Fault Tolerance might be required without the need for continuous availability. Such scenarios allow the use of High Availability clusters.

High Availability

A High Availability cluster provides Fault Tolerance by automatically detecting that hardware is down and then launching the service on an available node.

High Availability doesn’t synchronize the CPUs running on different nodes and doesn’t always allow local disks to be synchronized. With this in mind, it is recommended to place the drives used by nodes in separate, independent storage, such as network storage.

The reason is clear: a node cannot be reached after it fails, so the information on its storage device cannot be retrieved. The data storage system must also be fault tolerant; otherwise, High Availability is not possible. As a result, a High Availability cluster consists of two sub-clusters:

- Computing cluster consisting of nodes with virtual machines

- Storage cluster with disks that are used by computing nodes.

At present, the following solutions can be used to implement High Availability clusters with virtual machines on cluster nodes:

- Heartbeat, version 1.? with DRBD;

- Pacemaker;

- VMware vSphere;

- Proxmox VE;

- XenServer;

- OpenStack;

- oVirt;

- Red Hat Enterprise Virtualization;

- Windows Server Failover Clustering with Hyper-V server role;

- VMmanager Cloud.

Let’s have a closer look at VMmanager Cloud.

VMmanager Cloud

VMmanager Cloud is a product for deploying High Availability clusters based on QEMU-KVM virtualization. This technology was selected because it is actively developed and supported and allows any operating system to be installed on a virtual machine. The product uses Corosync to monitor the availability of cluster nodes. If one of the servers goes down, VMmanager distributes its virtual machines among the remaining nodes one by one.

In the simplified form this mechanism works as follows:

- The system identifies the cluster node with the lowest number of virtual machines.

- It checks whether this node has enough RAM to host the machine.

- If there is enough memory on a node for the pertinent machine, VMmanager creates a new virtual machine on this node.

- If there is not enough memory, the system checks the other nodes with more virtual machines.
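The steps above can be sketched in Python as follows. This is a simplified illustration of the described logic, not VMmanager’s actual code: the `Node` class and the `place_vm` and `redistribute` functions are hypothetical names, and RAM is the only resource taken into account.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """Hypothetical model of a surviving cluster node."""
    name: str
    free_ram_mb: int
    vms: list[str] = field(default_factory=list)


def place_vm(nodes: list[Node], vm_name: str, vm_ram_mb: int) -> Optional[Node]:
    """Place one VM on the least-loaded node that has enough free RAM."""
    # Try nodes in order of increasing VM count, as in the steps above.
    for node in sorted(nodes, key=lambda n: len(n.vms)):
        if node.free_ram_mb >= vm_ram_mb:
            node.vms.append(vm_name)
            node.free_ram_mb -= vm_ram_mb
            return node
    return None  # no surviving node can host this VM


def redistribute(failed_vms: dict[str, int], survivors: list[Node]) -> dict[str, Optional[str]]:
    """Relocate the VMs of a failed node one by one.

    `failed_vms` maps VM name to required RAM in MB; the result maps
    VM name to the chosen node name, or None if no node had enough RAM.
    """
    placement: dict[str, Optional[str]] = {}
    for vm_name, ram_mb in failed_vms.items():
        target = place_vm(survivors, vm_name, ram_mb)
        placement[vm_name] = target.name if target else None
    return placement


if __name__ == "__main__":
    survivors = [
        Node("node-a", free_ram_mb=4096, vms=["vm1", "vm2"]),
        Node("node-b", free_ram_mb=1024, vms=["vm3"]),
    ]
    print(redistribute({"vm4": 2048, "vm5": 512}, survivors))
```

In this example `vm4` does not fit on the least-loaded `node-b`, so it goes to `node-a`, while `vm5` lands on `node-b`. Because the nodes are re-sorted for every machine, the load spreads out as machines are placed one by one.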

Tests on several hardware configurations and a survey of many current VMmanager Cloud users showed that it normally takes 45-90 seconds to redistribute and restore all VMs from a failed node, depending on equipment performance.

It is recommended to dedicate one or more nodes as a reserve for emergencies and not to deploy VMs on them during routine operation. This minimizes the risk that the live cluster nodes lack the resources to take on virtual machines from the failed node. If only one backup node is used, this redundancy model is called N+1.
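A rough way to see why a reserve node helps is to check whether the free RAM on the remaining nodes could absorb the virtual machines of any single failed node. The Python sketch below does exactly that. It is a coarse aggregate check under simplifying assumptions (only RAM is counted, and VMs are treated as freely divisible across nodes), so passing it is necessary but not sufficient for a successful recovery. The function name and the sample figures are hypothetical.

```python
def survives_single_failure(used_ram_gb: dict[str, int], capacity_gb: dict[str, int]) -> bool:
    """Return True if, for every node, the total free RAM on the other
    nodes is at least the RAM used by that node's virtual machines."""
    for failed in used_ram_gb:
        spare = sum(
            capacity_gb[name] - used_ram_gb[name]
            for name in used_ram_gb
            if name != failed
        )
        if spare < used_ram_gb[failed]:
            return False
    return True


if __name__ == "__main__":
    capacity = {"node-1": 64, "node-2": 64, "node-3": 64}
    used = {"node-1": 48, "node-2": 48, "node-3": 48}
    # Without a reserve: only 32 GB are spare for 48 GB of displaced VMs.
    print(survives_single_failure(used, capacity))

    # N+1: add one empty backup node of the same size.
    capacity["backup"] = 64
    used["backup"] = 0
    print(survives_single_failure(used, capacity))
```

In this made-up example the three loaded nodes alone fail the check, while adding one idle backup node makes it pass.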

VMmanager Cloud supports the following storage types: file system, LVM, Network LVM, iSCSI and Ceph (specifically RBD, the RADOS Block Device, one of the Ceph implementations). The latter three are used for High Availability.

One lifetime license for ten operational nodes and one backup node costs €3520, or $3865 at the time of writing (a license costs €320 per node regardless of the number of CPUs). The license includes one year of free updates; starting from the second year, updates are provided on a subscription basis at €880 per year for the whole cluster.
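The license arithmetic can be checked with a short Python sketch that also projects the cost over several years. The function and parameter names are illustrative; the defaults are the prices quoted above, and the model assumes the update subscription is renewed every year after the first.

```python
def vmmanager_license_cost(
    nodes: int = 11,
    per_node_eur: int = 320,
    yearly_updates_eur: int = 880,
    years: int = 1,
) -> int:
    """Total cost in euros over `years`; the first year of updates is included."""
    if years < 1:
        raise ValueError("years must be at least 1")
    # One-off lifetime licenses plus update subscriptions from year two onward.
    return nodes * per_node_eur + yearly_updates_eur * (years - 1)


if __name__ == "__main__":
    print(vmmanager_license_cost())         # 11 nodes * 320 = 3520
    print(vmmanager_license_cost(years=3))  # 3520 + 2 * 880 = 5280
```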

Let’s look at how VMmanager Cloud has already been used to deploy High Availability clusters.

FirstByte

FirstByte started providing cloud hosting in February 2016. Initially their cluster was built on OpenStack; however, specialists for this system proved both scarce and expensive, which prompted the company to look for an alternative. The new system for building a High Availability cluster had to meet the following requirements:

- Ability to deploy KVM virtual machines.

- Integration with Ceph.

- Integration with a billing system for offering the existing services.

- Affordable license cost.

- Support from the software developer.

VMmanager Cloud met all the requirements.

Distinctive features of FirstByte cluster:

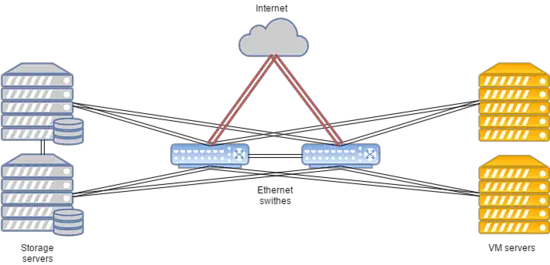

- Data transfer is based on Ethernet technology and Cisco equipment.

- Routing is performed by using Cisco ASR9001. The cluster uses around 50000 IPv6 addresses.

- Link speed between computing nodes and switches is at 10 Gbps.

- Data transfer speed between switches and storage nodes is 20 Gbps, with two combined channels at 10 Gbps each.

- A separate 20 Gbps link is used between racks with storage nodes for replication.

- SAS disks in combination with SSDs are installed on all storage nodes.

- Storage type is RBD.

The system layout is presented below:

Such configuration works for hosting popular websites, game servers and databases with load above the average.

FirstVDS

FirstVDS offers services on a fault tolerant cluster that was launched in September 2015.

VMmanager Cloud was chosen for this cluster due to the following factors:

- Solid experience of using ISPsystem control panels.

- Integration with BILLmanager out of the box.

- High quality of technical support.

- Integration with Ceph.

Their cluster has the following features:

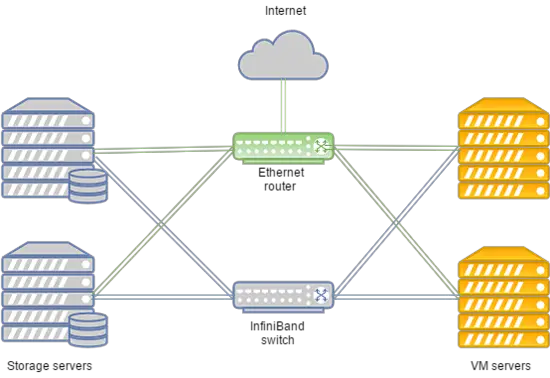

- Data transfer is based on Infiniband network with connection speed at 56 Gbps;

- Infiniband network is built on Mellanox equipment;

- Storage nodes have SSD drives;

- Storage type is RBD.

The system can be laid out in the following way:

In case of Infiniband network failure the connection between VM disk storage and computing servers is established over Ethernet network deployed on Juniper equipment. The new connection is set up automatically.

Due to the high speed of communication with the storage this cluster works perfectly for hosting websites with ultrahigh traffic, video and content streaming, as well as big data.

Conclusion

Let’s sum up the key findings of the article.

A Continuous Availability cluster is a must when every second of downtime brings substantial losses. If an outage of about 5 minutes is acceptable while virtual machines are being deployed on a backup node, a High Availability cluster can be a good option that reduces hardware and software costs.

It is also important to remember that the only way to achieve Fault Tolerance is redundancy. Make sure to replicate your servers, data communication equipment and links, Internet access channels and power. Replicate everything you can. Such measures make it possible to eliminate bottlenecks and potential points of failure that can cause downtime of the whole system. By taking the above measures, you can be sure that you have a fault tolerant cluster that is resistant to failures.

If you think that the High Availability model fits your requirements and VMmanager Cloud is a good tool to implement it, please refer to the installation manual and documentation to learn more about the system. I wish you failure-free and continuous operations!