Thus far, we've discussed the importance of setting goals to guide the metrics process, avoiding vanity metrics, and outlined the general types of metrics that are useful for studying your community. With a solid set of goals in place, we are now ready to discuss some of the technical details of gathering and analyzing your community metrics that align with those goals.

The tools you use and the way in which you collect metrics depend heavily on the processes you have in place for your community. Think about all of the ways in which your community members interact with each other and where collaboration happens. Where is code being committed? Where are discussions happening? More importantly than the where, what is the how? Do you have documented processes for community members to contribute? If you have a solid understanding of what your community is doing and how it is doing it, you'll be much more successful at extracting meaningful data to support your goals.

Getting started

Developing a solid metrics program can be a daunting task, but as an open source community developer, you probably have access to basic metrics data already. In the Liferay community, we were using GitHub for source code and documentation management, Pootle for translations, JIRA for issue tracking, SourceForge for binary downloads hosting, and Liferay itself for social collaboration (forums, wikis, blogs, etc.) This meant that we could access some basic metrics using each tool's built-in mechanisms:

- GitHub: GitHub API

- JIRA: JQL accessed remotely using the JIRA REST API

- Liferay: Liferay JSON Web Services API

- SourceForge: Allura REST API

$ curl https://api.github.com/repos/opensourceway/open-org-field-guide

{

"id": 64841869,

"name": "open-org-field-guide",

"full_name": "opensourceway/open-org-field-guide",

"owner": {...}

"size": 6258,

"stargazers_count": 8,

"forks": 2,

"open_issues": 0,

"watchers": 8,

"subscribers_count": 2

}

By developing some simple command line clients for each of these APIs, you have immediate access to all sorts of 1st-order metrics like the number of downloads, information on registered users, an informed forum activity, the number of commits and stars, and many others. If you are using some of these tools in your community, you should take a look at the available built-in metrics, in some cases directly through the tool's user interface (for example, GitHub's project Pulse pages).

Combining data

Although 1st-order metrics are interesting from a vanity perspective, we were after more useful 2nd-order metrics, which meant we first needed to consider how to combine data from multiple queries across different sources. For example, one of the critical metrics we identified was time-to-commit, which is the average time taken from the moment a community member files a bug in JIRA to the time the bug was committed in GitHub. There are several issues to be aware of when accessing data across system boundaries, including:

- Duplicate records. Not every system is good at de-duping their records. In some cases, it is a bug, but in others it may be part of the application's workflow. For example, you might find one record of a forum post that is in "draft" state, and another record representing its final, "published" state. You should watch out for any unusual counts that don't seem accurate.

- Spam records. These are notoriously difficult to filter after the fact, so ideally these are filtered before they are allowed to persist in the system of record. If you run into a lot of this, consider using tools like SpamAssassin or Akismet to filter automatically based on content of the records, before analysis.

- Cross-platform identity. Some developers use different identities across systems. They may use their "professional" identity when responding on the project forum and then use their "personal" identity when committing code or presenting at conferences. Combining data across these systems requires that you attempt to normalize identities if your metrics involve personal or corporate attribution. Look for features in the platforms you use that allow community members to make this link explicit. For example, Liferay community members can specify their Twitter handles, allowing us to track activity on Twitter and link it to their Liferay presence. While convenient, it's not 100% foolproof (not everyone wants to link accounts in this way.) The usual fallback is to pick some uniquely identifying piece of information, such as email address, and manually maintain an alias list, which maps multiple identities to a single identity for the purposes of metrics gathering.

In addition to personal identity, you should consider the metrics you want to produce and implement workflow changes that make the job easier. For example, at the time we were interested in time-to-commit metrics, we had no way to associate an issue in JIRA with a GitHub commit, so we added a field in JIRA that got populated by developers when they closed an issue in JIRA, and contained a reference to the Git commit. In this way, we essentially instrumented our community tools with the necessary data to be able to gather and analyze metrics.

Generating your metrics

With a somewhat "clean" source of raw metrics from the systems that your community uses to do its work, and any necessary links between systems you may need, you can start to generate the metrics you've identified as being relevant to your metrics goals. Diversity of community means there's no single Tool To Rule Them All, so you'll need to experiment to find the best set of tools that works for you and your community.

Brute force

As a developer with limited time, I often chose the DIY approach for certain metrics. It is easy to get started and you don't have to be a data scientist to get valuable metrics that can help achieve your goals. For example, consider the forum. It is a record of posts by people, sometimes with extra metadata about the nature of the posts (e.g., tags, categories, time of day, thread information, etc.). Regardless of the mechanics of the forum (perhaps it is a mailing list, or a web-based forum) you usually have access to a database of records that each contain:

● date and time

● category (Installation/Setup, Using, Developing, Troubleshooting, etc.)

● identity of the user (name, email, account ID, etc.)

● which thread it is a part of, where it lies within the thread hierarchy

● nature of the post (is it a question, or an accepted answer)

● views, edits

● content of the post

With this raw data, it is easy to construct a query, or write a small program in your favorite language to calculate all sorts of interesting metrics besides the obvious "counter" type metrics. Things like:

● average time a thread goes from initial post to initial response or to accepted answer

● combining post data with location data from the user's identity to determine geographic "hot spots" in the community

● how many posts are being ignored over time

All of these lined up with our chosen metrics in the Liferay community and were easy to generate periodically using several bash scripts and SQL queries. However, as we continued to refine our goals and add more metrics, this didn't scale very well, especially when we wanted to do more cross-system metrics.

Advanced metrics tools

Producing metrics yourself can be a very rewarding challenge, as you'll learn a lot about your community and the nature of the data available to you, which is super important when you're ready to take it to the next level. There are a number of open source metrics tools that you can use that will do a lot of the heavy lifting for you, allowing you to focus on the result instead of the mechanics of producing the data.

Example visualization of Grimore Labs data from the Liferay community

If your community uses mailing lists, MLSstats is a great way to parse them and load them into a database for further processing. (For example, using Gource; see Dawn Foster's excellent article on Gource visualizations.)

Visone is a visualization tool build to analyze social networks and the dependencies and links between elements in the network. In addition to the obvious (analyzing your community's social fabric), you can also use it to visualize code. In the Liferay community, we used this tool to measure how developers moved around the codebase, observing and reporting on activity within modules over time.

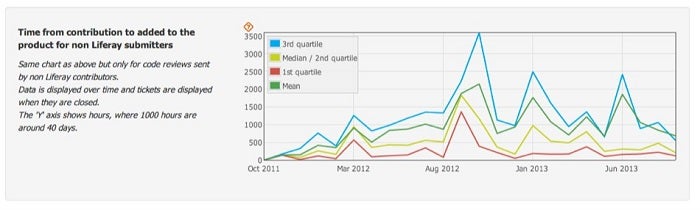

Grimoire Lab is a collection of 100% open source software analytics tools. It includes tooling to capture data across systems, de-duplicate, normalize identities, and visualize the result. We partnered with Bitergia and used these tools to for more advanced metrics, such as tracking our community's diversity (geographic and demographic), and several "X over time" metrics that combined data from our forums, GitHub, JIRA, etc.

This is a small sampling of the open source tools we used in the Liferay community, but there are others out there that may fit your needs better. If you find them, be sure to let our readers know about them in the comments.

With a solid set of goals and the ability to generate metrics for tracking them, you’re well on your way to a healthier open source community. In the next article we’ll show you how to analyze the metrics themselves to get a deeper understanding of what they can (and cannot) tell you. See you next month.

Comments are closed.