“It’s all 1s and 0s.” People say this when they’re making a joke or a sarcastic remark. When it comes to computers, though, it’s really true. At the hardware level, that’s all there is. The processor, the memory, the various forms of storage, the USB, HDMI, and network connections, along with everything else in that cell phone, tablet, laptop, or desktop, use only 1s and 0s. Bytes group those 1s and 0s, which is a big help in keeping them organized. Let’s look at how they do that.

Bytes are the unit of measure for data and programs stored and used in your computer. Though the byte has existed for a long time in computer history and has taken several forms, its current 8-bit length is well settled. Taken either singly or as adjacent groups, bytes are the most common way the bits in a computer are kept organized.

So what’s a bit? A bit is a binary digit; that is, it can have only two values. In computers the two values a bit can have are zero (0) and one (1). That’s it, no other choices. A byte is just eight bits taken together to represent a binary number. Through various coding schemes, those numbers can represent a wide variety of other things, like the characters we write with.

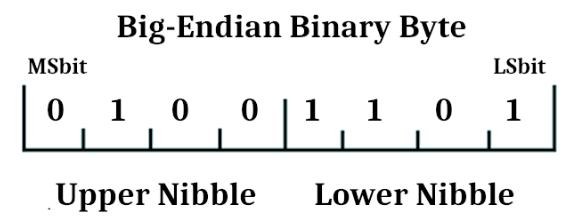

The table below shows a single Big-Endian byte, with the individual bits of the byte and their associated powers of two. Within a byte, bits are always written in this Big-Endian order, with the most significant bit on the left, whether the byte holds data or program code. The decimal value of each power of two is shown with each bit for reference. Imagine a line between Bit 4 and Bit 3: that is where the byte is subdivided into two four-bit groups called nibbles. Little-Endian is also a very commonly used format, but it governs the order of bytes in larger structures, not the order of bits. Stay tuned for more on Endians. If you’re curious about the name, search for “etymology of endian.”

One Big-Endian Byte:

|  | Bit 7 | Bit 6 | Bit 5 | Bit 4 | Bit 3 | Bit 2 | Bit 1 | Bit 0 |
|---|---|---|---|---|---|---|---|---|
| Power of 2 | 2^7 | 2^6 | 2^5 | 2^4 | 2^3 | 2^2 | 2^1 | 2^0 |
| Decimal value | 128 | 64 | 32 | 16 | 8 | 4 | 2 | 1 |
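
To check the table, the short Python sketch below adds up the power of two for each bit that is set to 1. The helper name `byte_value` is purely illustrative.

```python
def byte_value(bits: str) -> int:
    """Return the decimal value of an 8-bit string written MSB first (Bit 7 on the left)."""
    if len(bits) != 8 or set(bits) - {"0", "1"}:
        raise ValueError("expected exactly eight 0/1 characters")
    total = 0
    for position, bit in enumerate(reversed(bits)):  # position 0 is Bit 0, the rightmost bit
        if bit == "1":
            total += 2 ** position  # a set bit adds its power of two
    return total


print(byte_value("10010110"))  # 128 + 16 + 4 + 2 = 150
print(byte_value("11111111"))  # all bits set: 255
```

Python's built-in `int("10010110", 2)` performs the same conversion.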

Each nibble of a byte can hold a four-bit binary number, as shown in the following table. If a bit is set to “1”, its power of two adds to the value of the nibble. If a bit is set to “0”, its power of two does not. Since a byte is two nibbles, it can hold a two-digit hexadecimal number. Bits are really all that a computer can use; programmers and engineers developing computer hardware use hexadecimal to make dealing with the bits easier. In the table below the most significant bit is on the left, so reading from right to left the bits are worth 2^0, 2^1, 2^2, and 2^3 (1, 2, 4, and 8).

One Big-Endian Nibble:

| Binary Number | Hexadecimal Value |
|---|---|
| 0000 | 0 |
| 0001 | 1 |
| 0010 | 2 |
| 0011 | 3 |
| 0100 | 4 |
| 0101 | 5 |
| 0110 | 6 |
| 0111 | 7 |
| 1000 | 8 |
| 1001 | 9 |
| 1010 | A |
| 1011 | B |
| 1100 | C |
| 1101 | D |
| 1110 | E |
| 1111 | F |
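
Splitting a byte into its nibbles takes only a shift and a mask. This minimal Python sketch (the function name `split_nibbles` is hypothetical) shows that each nibble maps to exactly one hexadecimal digit.

```python
def split_nibbles(byte: int) -> tuple[int, int]:
    """Split a byte (0-255) into its upper and lower nibbles."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("a byte holds values 0 to 255")
    upper = byte >> 4      # shift the upper four bits down into the low positions
    lower = byte & 0x0F    # mask off everything except the lower four bits
    return upper, lower


upper, lower = split_nibbles(0b1011_0111)
print(f"{upper:04b} {lower:04b}")  # 1011 0111
print(f"{upper:X}{lower:X}")       # B7, one hex digit per nibble
```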

I’ll explain Big-Endian starting with a one-byte diagram. The longer lines at the ends of the frame mark the boundaries of the byte, so if you were drawing a group of adjacent bytes it would be clear where one byte left off and the next began. The short lines divide the frame into individual locations where each of the eight bits can be shown. The medium line in the middle divides the byte into two equal four-bit pieces, which are the nibbles. Nibbles also have a long and varied history, and I’ve never seen them formally standardized. However, the current, well-settled view is that nibbles are groups of four bits, as I have shown them. All of these lines exist only when people draw bytes; they don’t exist in the computer.

The Upper Nibble and Lower Nibble labels are used as they would be in a Big-Endian byte. In Big-Endian, the most significant digit is at the left end of a number. The Upper Nibble on the left is the most significant half of the number in the byte, and the Lower Nibble on the right is the least significant half. Likewise, the most significant bit is on the left and is called the MSBit (usually written MSB), while the least significant bit is on the right and is called the LSBit (usually written LSB). This matches how we write decimal numbers, with the most significant digit on the left. It is called Big-Endian because the “big end” of the number comes first.
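
In code, the MSB and LSB of a byte can be picked out with the same shift-and-mask idea. The helpers `msb` and `lsb` below are illustrative names, not a standard API.

```python
def msb(byte: int) -> int:
    """Most significant bit (Bit 7, the leftmost bit) of a byte."""
    return (byte >> 7) & 1


def lsb(byte: int) -> int:
    """Least significant bit (Bit 0, the rightmost bit) of a byte."""
    return byte & 1


value = 0b1000_0010  # decimal 130
print(msb(value), lsb(value))  # 1 0
```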

Because a byte can hold two hexadecimal digits, it can hold hexadecimal numbers from 00 to FF (0 to 255 in decimal). So if you are using bytes to represent the characters of a human-readable language, you just give each character, punctuation mark, and so on a number. (Then, of course, you get everyone to agree to the coding you invented.) That is only one use for bytes. Bytes also hold the program code your computer runs, the numbers in the various data you might have, and everything else that inhabits a computer, whether in the CPU, memory, or storage, or zooming around on the various buses and interface ports.
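
ASCII is one coding that nearly everyone did agree on. This Python sketch encodes a short string with the built-in `str.encode` and prints each resulting byte as two hexadecimal digits.

```python
text = "Hi!"
encoded = text.encode("ascii")        # each character becomes one byte
print([f"{b:02X}" for b in encoded])  # ['48', '69', '21']
print(encoded.decode("ascii"))        # Hi!

# Every byte value fits in two hex digits, 00 through FF.
print(f"{0:02X} {255:02X}")           # 00 FF
```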

As it turns out, there are two commonly used byte orders. Big-Endian has been used in the prior examples. Its feature is having the most significant digit on the left and the least significant digit on the right.

There is also a format called Little-Endian. As you might expect, it is the opposite of Big-Endian, with the least significant digit on the left and the most significant digit on the right. This is the opposite of how we write decimal numbers. Little-Endian is not used for the order of bits in a byte, but it is used for the order of bytes in a larger structure. For instance, a number stored in a Little-Endian Word of two bytes would have its least significant byte on the left, at the lower memory address. If the same two-byte number were stored Big-Endian, the most significant byte would be on the left instead. Little-Endian applies only to multi-byte numbers, where it sets the significance order of the bytes in the larger data structure.
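
Python's built-in `int.to_bytes` and `int.from_bytes` take the byte order as a parameter, which makes the difference easy to see. The value 0x1234 is just an example.

```python
value = 0x1234  # a 16-bit number: 0x12 is the most significant byte

little = value.to_bytes(2, byteorder="little")
big = value.to_bytes(2, byteorder="big")

print(little.hex(" "))  # 34 12  (least significant byte first)
print(big.hex(" "))     # 12 34  (most significant byte first)

# Reading the bytes back with the matching order recovers the number.
assert int.from_bytes(little, "little") == value
assert int.from_bytes(big, "big") == value
```

Note that the bits inside each byte are unchanged; only the order of the two bytes differs.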

There are reasons for using both Big-Endian and Little-Endian byte ordering, and the meaty reasons are beyond the scope of this article. However, Little-Endian tends to be used in microprocessors. The x86-64 processors in most PCs use Little-Endian byte order, though later generations do have special instructions that provide limited support for Big-Endian data. Big-Endian byte order is widely used in networking and, notably, in IBM’s big Z-series mainframes. You’re not necessarily limited to one or the other, either: newer ARM processors can use either byte order. Processors that can use both Big-Endian and Little-Endian byte ordering are sometimes referred to as Bi-Endian.
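
Python's standard `struct` module can pack values in network byte order (Big-Endian) or in the machine's native order, and `sys.byteorder` reports which order the running machine uses. The port number 8080 is only an example value.

```python
import struct
import sys

print(sys.byteorder)  # "little" on x86-64 and most ARM systems

port = 8080  # 0x1F90
network = struct.pack("!H", port)  # "!" = network byte order (Big-Endian), H = unsigned 16-bit
native = struct.pack("=H", port)   # "=" = this machine's native byte order, standard size

print(network.hex())  # 1f90 on every machine
print(native.hex())   # 901f on a Little-Endian machine, 1f90 on a Big-Endian one
```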

Well, sometimes you really need more than one byte to hold a number. To that end, there are longer formats composed of multiple bytes. For instance, x86-64 processors have Words, which are 16 bits or 2 bytes lined up next to each other head to tail, so to speak. They also have Double Words (32 bits or 4 bytes) and Quad Words (64 bits or 8 bytes). These are just examples of data forms made available by the processor hardware.
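
As a rough sketch, Python's `struct` format codes `H`, `I`, and `Q` correspond to unsigned 16-, 32-, and 64-bit integers when a standard-size prefix such as `<` is used, so they can stand in for Words, Double Words, and Quad Words. This is only an analogy to the x86-64 data forms, not the processor's own instructions.

```python
import struct

# "<" selects Little-Endian with standard sizes, matching x86-64 byte order.
# H, I and Q are unsigned 16-, 32- and 64-bit integers.
formats = {"Word": "<H", "Double Word": "<I", "Quad Word": "<Q"}

for name, fmt in formats.items():
    size = struct.calcsize(fmt)
    largest = 2 ** (8 * size) - 1  # all bits set
    print(f"{name}: {size} bytes, {8 * size} bits, max {largest:,}")
    print("  value 1 stored as", struct.pack(fmt, 1).hex(" "))
```

Packing the value 1 puts the 01 byte first, at the lowest address, which is the Little-Endian layout.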

Programmers working in programming languages have many more ways to organize bits and bytes. When the program is ready, a compiler or another mechanism converts the program’s organization of bits and bytes into data forms that the CPU hardware can deal with.
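
Python's `struct` module gives a small taste of this translation: a record that a program treats as named fields is laid out as a fixed sequence of bytes. The `Reading` record, its fields, and the helper functions below are hypothetical, and a real compiler chooses its own layout, alignment, and padding.

```python
import struct
from typing import NamedTuple


class Reading(NamedTuple):
    """A hypothetical record: a 16-bit sensor id and a 32-bit count."""
    sensor_id: int
    count: int


# "<HI": Little-Endian, unsigned 16-bit then unsigned 32-bit, no padding.
LAYOUT = struct.Struct("<HI")


def pack_reading(r: Reading) -> bytes:
    return LAYOUT.pack(r.sensor_id, r.count)


def unpack_reading(data: bytes) -> Reading:
    return Reading(*LAYOUT.unpack(data))


raw = pack_reading(Reading(sensor_id=7, count=1000))
print(LAYOUT.size, raw.hex(" "))  # 6 07 00 e8 03 00 00
print(unpack_reading(raw))
```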

OppaErich

Nope! The hardware does not know about numbers; the hardware doesn’t know anything at all. At the hardware level it’s just voltage or little-to-no voltage.

/nerd-mode off

Pat Kelly

You’re right, though some folks would say that charge is the measure that determines 1 or 0. Then things get complicated by the fact that PCs and laptops aren’t the only computers. Computers are almost literally everywhere, and there are thousands of different digital chips used in them. The people who invented and designed those chips had their own ideas of how best to represent the 1s and 0s for the chip(s) they worked on. As a result, the voltage levels and charges that represent 1s and 0s vary from chip to chip; some are even opposites. What they have in common is that they use their voltages and charges to represent 1s and 0s. I decided to stay at the 1s and 0s level because it is common to all of them. Oh, and just for fun: though they are a very small minority of computers, some computers do not use binary numbers. I find the most interesting non-binary computers to be analog computers. They aren’t used much lately. They solve complex sets of integral and differential equations using analog (continuously variable) voltages.

Mr-miky

In my opinion the tables are misleading: in the decimal representation 1234, the 4 has the smallest weight and is on the right.

Similarly, in 1110 the 0 has the lowest weight, so in my opinion “little endian” has the least significant bit on the right.

Jason Moore

I enjoyed this article, thanks for the hard work on presenting it!

Szymon

I am really sorry, but there are some misconceptions in your article.

As far as I am concerned, endianness relates to the order of BYTES in MEMORY, not the order of BITS in BYTES. A byte is a representation of a number described by bits, but limited to 8 digits. We call it binary representation because the number used as the base is 2, just as you have shown in your article. However, there are other systems that can be used. In general, people are most familiar with the decimal system, with 10 used as the base. We don’t write numbers backwards, do we? Why should we do it when it comes to bits? If you don’t believe me, try a simple example of the left and right shift operators on the value ‘2’. You will see that a left shift always produces ‘4’ and a right shift ‘1’. Simple math, yet very effective 🙂

But wait, there is more! Take a 16-bit integer (or, if you would like to check it in assembly, a register at least 16 bits wide) on a little-endian architecture. If you left-shift the value ‘0xFF’ you will obtain… ‘0x01FE’! This is because, as you write your code, you should work on numbers, not bit representations (you are still allowed to, though, but you really need to know what you are doing).
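
Both of Szymon's shift examples can be tried in Python. Python integers have no fixed width, so this sketch masks results to 16 bits to imitate a 16-bit register; that mask is an assumption made for illustration.

```python
MASK_16 = 0xFFFF  # keep only the low 16 bits, like a 16-bit register

print(2 << 1)                      # 4
print(2 >> 1)                      # 1
print(hex((0xFF << 1) & MASK_16))  # 0x1fe
# The shift works on the number; byte order only appears when the
# value is split into bytes for storage in memory.
print(((0xFF << 1) & MASK_16).to_bytes(2, "little").hex(" "))  # fe 01
```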

My understanding is that the ‘problem’ with endianness is commonly observed in one case: storing data represented by words (multiple bytes; the number depends on the architecture) in memory. In almost every case we are able to address each byte of memory. If we store a 4-byte number, we will be able to access it as individual bytes, and this can be harmful if we don’t know our architecture. In some cases, the file format we are preparing (especially in graphics) will require a byte order that does not match our architecture; then we must be careful about how we read and store values.
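
A Python sketch of the risk described above: the same 4-byte value looks different byte by byte depending on the machine, and reading a fixed-order field with the wrong order gives a wrong number. The 4-byte field here is hypothetical, not taken from any particular file format.

```python
import sys

value = 0x11223344
stored = value.to_bytes(4, sys.byteorder)  # how this machine would lay it out in memory

print(sys.byteorder, stored.hex(" "))  # on x86-64: little 44 33 22 11
print(hex(stored[0]))                  # the "first" byte depends on the architecture

# A format that specifies Big-Endian must be read as Big-Endian,
# regardless of the machine doing the reading.
field = bytes([0x00, 0x00, 0x01, 0x00])  # hypothetical 4-byte Big-Endian field
print(int.from_bytes(field, "big"))      # 256, the intended value
print(int.from_bytes(field, "little"))   # 65536, the wrong value
```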

To be fair, there are also some cases where the BITS of a BYTE are sent in reversed order. This is, however, used in communication protocols and is specification dependent. You can also give your own meaning to bytes (for instance, ASCII), but in terms of numeric interpretation you are rather limited to the capabilities of your CPU. Of course, it is possible to implement custom operators on top of the provided instruction set, and it would probably even be possible to implement some arithmetic operations for your own number representation. However, that is not how it is done on most of the units available on the market, and it would really be more of an academic dispute.
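
A classic UART serial link is one protocol that sends each byte least significant bit first. The Python sketch below, with hypothetical helper names, lists a byte's bits in that transmission order and rebuilds the same value on the receiving side.

```python
def bits_lsb_first(byte: int) -> list[int]:
    """Bits of a byte in the order an LSB-first serial link sends them."""
    return [(byte >> i) & 1 for i in range(8)]


def from_bits_lsb_first(bits: list[int]) -> int:
    """Rebuild the byte: the i-th bit received is worth 2**i."""
    return sum(bit << i for i, bit in enumerate(bits))


sent = bits_lsb_first(0x41)            # ASCII "A", 0100 0001
print(sent)                            # [1, 0, 0, 0, 0, 0, 1, 0]
print(hex(from_bits_lsb_first(sent)))  # 0x41, same value as before
```

The transmission order changes, but the value of the byte, and the way we write it, does not.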

Also, when it comes to processors, it really doesn’t matter to us (and, I guess, to them) what the order of bits in a ‘physical’ byte is. I don’t want to go into details, but the only concern is which connections the logic states are propagated to.

I hope this will clear some things up. Have a great time exploring low-level world!

Cheers!

Pat Kelly

Endian applies to bits in a byte. Endian also applies to longer structures (Words, Double Words, etc.) when the structure is taken as a whole.

If someone breaks down a larger structure into bytes and somehow reinterprets the number in the larger structure, they can certainly get erroneous results. They must know the endian and preserve the byte order to maintain the integrity of the number stored in the larger structure.

Say someone is receiving bytes from somewhere that are segments of a large multi-byte number. First, they must know the endian of the number and the order in which the bytes are being received. Then that byte order must be preserved when reconstructing the larger number, so that its endian and value are maintained.
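
The reconstruction described above can be sketched in Python by shifting each byte into place, most significant first. The function `assemble` is a hypothetical helper; the standard `int.from_bytes` does the same job.

```python
def assemble(chunks: list[int], byteorder: str) -> int:
    """Combine received bytes into one number, given the sender's byte order."""
    if byteorder == "little":
        chunks = list(reversed(chunks))  # put the most significant byte first
    elif byteorder != "big":
        raise ValueError("byteorder must be 'big' or 'little'")
    value = 0
    for b in chunks:
        value = (value << 8) | b  # make room for the next byte, then add it
    return value


received = [0x12, 0x34, 0x56, 0x78]       # bytes in the order they arrived
print(hex(assemble(received, "big")))     # 0x12345678
print(hex(assemble(received, "little")))  # 0x78563412
```

The same four bytes give two very different numbers, which is why the sender's byte order has to be known.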

People who write software dealing directly with bytes and longer structures must understand the data they are dealing with. For this issue in particular, they must know the endian, byte order, and length of the data items so they can save and retrieve them in ways that do not cause errors in the data.

Frank

Szymon is right: endianness refers to byte order only and has nothing to do with bits. Bits are numbered from right to left. Bit 0 is the rightmost and the smallest; bit 7 is the leftmost and largest, always. So most of the info in the article is just wrong.

Pat Kelly

Thanks for being the one who finally brought me back to reality. I know bytes are always big-endian. Even the non-processor digital chips I use in my circuit designs have bytes set up that way. I think I just got caught up in wanting to show little-endian because most folks have never seen such a thing.

Dave

Thanks Frank! I read the article and thought “Whoops! I REALLY don’t think so!” da

Pat Kelly

I believe it’s fixed now.