As enterprises increase their use of artificial intelligence (AI), machine learning (ML), and deep learning (DL), a critical question arises: How can they scale and industrialize ML development? These conversations often focus on the ML model; however, this is only one step along the way to a complete solution. To achieve in-production application and scale, model development must include a repeatable process that accounts for the critical activities that precede and follow development, including getting the model into a public-facing deployment.

This article demonstrates how to deploy, scale, and manage a deep learning model that serves up image recognition predictions using Kubermatic Kubernetes Platform.

Kubermatic Kubernetes Platform is a production-grade, open source Kubernetes cluster-management tool that offers flexibility and automation to integrate with ML/DL workflows with full cluster lifecycle management.

Get started

This example deploys a deep learning model for image recognition. It uses the CIFAR-10 dataset, which consists of 60,000 32x32 color images in 10 classes. The model is built with the Gluon library in Apache MXNet, and NVIDIA GPUs accelerate the workload. If you want to use a model pre-trained on the CIFAR-10 dataset, check out the getting started guide.
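To make the dataset description concrete, the following sketch works through the size arithmetic implied by 60,000 32x32 color images in 10 classes. The constant and function names are illustrative only, and the channels-first batch shape assumes the layout that MXNet's `ToTensor` transform produces.

```python
# Figures taken from the CIFAR-10 description above.
NUM_IMAGES = 60_000
NUM_CLASSES = 10
HEIGHT, WIDTH, CHANNELS = 32, 32, 3  # color images have three channels (RGB)

# Number of pixel values in a single image.
values_per_image = HEIGHT * WIDTH * CHANNELS

# CIFAR-10 is balanced, so every class has the same number of images.
images_per_class = NUM_IMAGES // NUM_CLASSES

# Raw size when each value is stored as one unsigned byte.
raw_bytes = NUM_IMAGES * values_per_image
raw_mib = raw_bytes / (1024 ** 2)


def input_shape(batch_size):
    """Shape of a batch in channels-first (N, C, H, W) layout."""
    return (batch_size, CHANNELS, HEIGHT, WIDTH)


print(f"{values_per_image} values per image, {images_per_class} images per class")
print(f"about {raw_mib:.1f} MiB of raw pixel data")
print("single-image batch shape:", input_shape(1))
```

The single-image batch shape is the one the prediction server later builds with `expand_dims(axis=0)` before calling the network.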

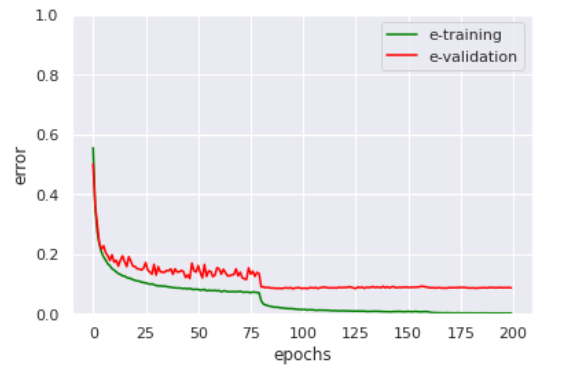

The model was trained over a span of 200 epochs, as long as the validation error kept decreasing slowly without causing the model to overfit. This plot shows the training process:

(Chaimaa Zyami, CC BY-SA 4.0)
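The article does not show the training loop, so the following is only a sketch of one common way to express "train for up to 200 epochs while the validation error keeps improving": patience-based early stopping. The `patience` and `min_delta` parameters, the function names, and the `val_error_for_epoch` callback are assumptions for illustration, not the settings used to train this model.

```python
MAX_EPOCHS = 200  # upper bound on training length, as described above


def should_stop(val_errors, patience=10, min_delta=0.0):
    """Return True if the last `patience` epochs brought no improvement.

    "Improvement" means the best validation error in the recent window is
    lower than the best error seen before it by more than `min_delta`.
    """
    if len(val_errors) <= patience:
        return False  # not enough history to judge yet
    best_before = min(val_errors[:-patience])
    recent_best = min(val_errors[-patience:])
    return recent_best >= best_before - min_delta


def train(val_error_for_epoch, max_epochs=MAX_EPOCHS, patience=10):
    """Run epochs until the budget is used up or validation stalls.

    `val_error_for_epoch` is a hypothetical callback standing in for
    "train one epoch, then measure the validation error".
    """
    history = []
    for epoch in range(max_epochs):
        history.append(val_error_for_epoch(epoch))
        if should_stop(history, patience=patience):
            break
    return history
```

In a real run, the callback would train for one epoch on the GPU and evaluate on the validation split, and you would typically save the parameters from the best epoch rather than the last one.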

After training, it's essential to save the model's parameters so they can be loaded later:

    file_name = "net.params"
    net.save_parameters(file_name)

Once the model is ready, wrap your prediction code in a Flask server. This allows the server to accept an image as an argument to its request and return the model's prediction in the response:

    from gluoncv.model_zoo import get_model
    import matplotlib.pyplot as plt
    from mxnet import gluon, nd, image
    from mxnet.gluon.data.vision import transforms
    from gluoncv import utils
    from PIL import Image
    import io
    import flask

    app = flask.Flask(__name__)

    @app.route("/predict", methods=["POST"])
    def predict():
        if flask.request.method == "POST":
            if flask.request.files.get("img"):
                # Read the uploaded file (form field "img") into a PIL image
                img = Image.open(io.BytesIO(flask.request.files["img"].read()))

                # Resize, crop, and normalize the image to match the training data
                transform_fn = transforms.Compose([
                    transforms.Resize(32),
                    transforms.CenterCrop(32),
                    transforms.ToTensor(),
                    transforms.Normalize([0.4914, 0.4822, 0.4465], [0.2023, 0.1994, 0.2010])])
                img = transform_fn(nd.array(img))

                # Rebuild the network and load the saved parameters
                net = get_model('cifar_resnet20_v1', classes=10)
                net.load_parameters('net.params')

                # Add a batch dimension and run the forward pass
                pred = net(img.expand_dims(axis=0))

                class_names = ['airplane', 'automobile', 'bird', 'cat', 'deer',
                               'dog', 'frog', 'horse', 'ship', 'truck']
                ind = nd.argmax(pred, axis=1).astype('int')
                prediction = 'The input picture is classified as [%s], with probability %.3f.' % (
                    class_names[ind.asscalar()], nd.softmax(pred)[0][ind].asscalar())
                return prediction

        # No file was sent under the "img" field
        return "Missing 'img' file in the request.", 400

    if __name__ == '__main__':
        app.run(host='0.0.0.0')

Containerize the model

Before you can deploy your model to Kubernetes, you need to install Docker and create a container image with your model.

- Download, install, and start Docker:

sudo yum install -y yum-utils device-mapper-persistent-data lvm2 sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo sudo yum install docker-ce sudo systemctl start docker - Create a directory where you can organize your code and dependencies:

mkdir kubermatic-dl cd kubermatic-dl - Create a

requirements.txtfile to contain the packages the code needs to run:

flask gluoncv matplotlib mxnet requests Pillow - Create the Dockerfile that Docker will read to build and run the model:

FROM python:3.6 WORKDIR /app COPY requirements.txt /app RUN pip install -r ./requirements.txt COPY app.py /app CMD ["python", "app.py"]~This Dockerfile can be broken down into three steps. First, it creates the Dockerfile and instructs Docker to download a base image of Python 3. Next, it asks Docker to use the Python package manager

pipto install the packages inrequirements.txt. Finally, it tells Docker to run your script viapython app.py. - Build the Docker container:

sudo docker build -t kubermatic-dl:latest .This instructs Docker to build a container for the code in your current working directory,

kubermatic-dl. - Check that your container is working by running it on your local machine:

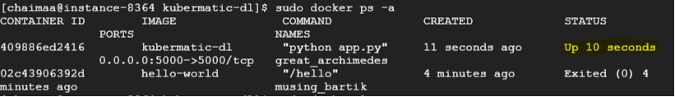

sudo docker run -d -p 5000:5000 kubermatic-dl - Check the status of your container by running

sudo docker ps -a:

(Chaimaa Zyami, CC BY-SA 4.0)
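Once the container is running, you can also exercise the `/predict` endpoint directly. The sketch below is one possible client using only the Python standard library. It assumes the container is reachable at `http://localhost:5000` (from the `-p 5000:5000` mapping) and that the upload field is named `img`, as in the Flask code. The helper names `build_multipart` and `predict` and the file name in the usage line are hypothetical.

```python
import os
import urllib.request
import uuid


def build_multipart(field_name, filename, payload, content_type="application/octet-stream"):
    """Encode one file as a multipart/form-data body; return (body, headers)."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field_name}"; filename="{filename}"\r\n'
        f"Content-Type: {content_type}\r\n\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    body = head + payload + tail
    headers = {
        "Content-Type": f"multipart/form-data; boundary={boundary}",
        "Content-Length": str(len(body)),
    }
    return body, headers


def predict(image_path, base_url="http://localhost:5000"):
    """POST an image file to the /predict endpoint and return the response text."""
    with open(image_path, "rb") as f:
        payload = f.read()
    body, headers = build_multipart(
        "img", os.path.basename(image_path), payload, "image/jpeg"
    )
    request = urllib.request.Request(
        f"{base_url}/predict", data=body, headers=headers, method="POST"
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.read().decode("utf-8")


# Example usage (needs the container to be running and a local image file):
# print(predict("horse.jpg"))
```

The same function works against the cluster later on if you pass the service's external address as `base_url`. The `requests` package listed in `requirements.txt` would make the upload shorter, but the standard library version avoids an extra dependency on the client side.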

Upload the model to Docker Hub

Before you can deploy the model on Kubernetes, it must be publicly available. Do that by adding it to Docker Hub. (You will need to create a Docker Hub account if you don't have one.)

- Log into your Docker Hub account:

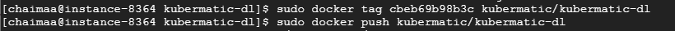

sudo docker login - Tag the image so you can refer to it for versioning when you upload it to Docker Hub:

sudo docker tag <your-image-id> <your-docker-hub-name>/<your-app-name> sudo docker push <your-docker-hub-name>/<your-app-name>

(Chaimaa Zyami, CC BY-SA 4.0)

- Check your image ID by running

sudo docker images.
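If you script the upload, it can help to build the image reference in one place so the tag and push steps always agree. The sketch below only assembles the command lines and does not run them. The function names and the sample values in the usage comment are hypothetical. The only Docker behavior it relies on is that a reference without an explicit tag defaults to `latest`.

```python
def image_reference(hub_user, app_name, tag="latest"):
    """Build a Docker Hub reference of the form <user>/<app>:<tag>."""
    if not hub_user or not app_name:
        raise ValueError("Docker Hub user name and app name are required")
    return f"{hub_user}/{app_name}:{tag}"


def tag_and_push_commands(image_id, hub_user, app_name, tag="latest"):
    """Return the tag and push commands as argument lists, without running them."""
    ref = image_reference(hub_user, app_name, tag)
    return [
        ["sudo", "docker", "tag", image_id, ref],
        ["sudo", "docker", "push", ref],
    ]


# Example usage (placeholder values; run only after `sudo docker login`):
# import subprocess
# for cmd in tag_and_push_commands("<your-image-id>", "<your-docker-hub-name>", "kubermatic-dl"):
#     subprocess.run(cmd, check=True)
```

Passing an explicit `tag` such as a version number, rather than relying on `latest`, makes it easier to tell which build a cluster is running.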

Deploy the model to a Kubernetes cluster

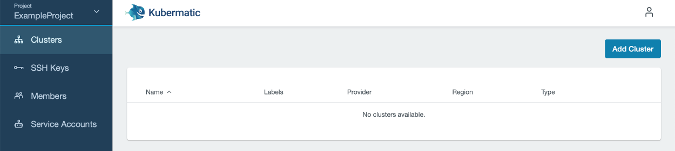

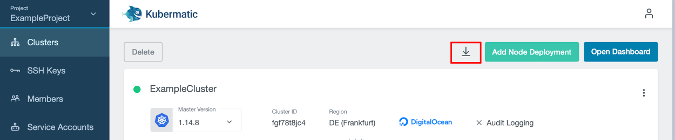

- Create a project on the Kubermatic Kubernetes Platform, then create a Kubernetes cluster using the quick start tutorial.

(Chaimaa Zyami, CC BY-SA 4.0)

- Download the

kubeconfigused to configure access to your cluster, change it into the download directory, and export it into your environment:

(Chaimaa Zyami, CC BY-SA 4.0)

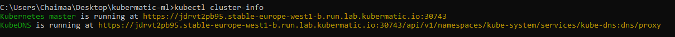

- Using

kubectl, check the cluster information, such as the services thatkube-systemstarts on your cluster:

kubectl cluster-info

(Chaimaa Zyami, CC BY-SA 4.0)

- To run the container in the cluster, you need to create a deployment (

deployment.yaml) and apply it to the cluster:

apiVersion: apps/v1 kind: Deployment metadata: name: kubermatic-dl-deployment spec: selector: matchLabels: app: kubermatic-dl replicas: 3 template: metadata: labels: app: kubermatic-dl spec: containers: - name: kubermatic-dl image: kubermatic00/kubermatic-dl:latest imagePullPolicy: Always ports: - containerPort: 8080kubectl apply -f deployment.yaml - To expose your deployment to the outside world, you need a service object that will create an externally reachable IP for your container:

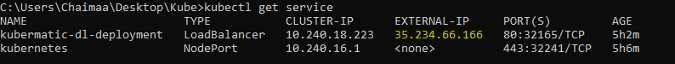

kubectl expose deployment kubermatic-dl-deployment --type=LoadBalancer --port 80 --target-port 5000 - You're almost there! Check your services to determine the status of your deployment and get the IP address to call your image recognition API:

kubectl get service

(Chaimaa Zyami, CC BY-SA 4.0)

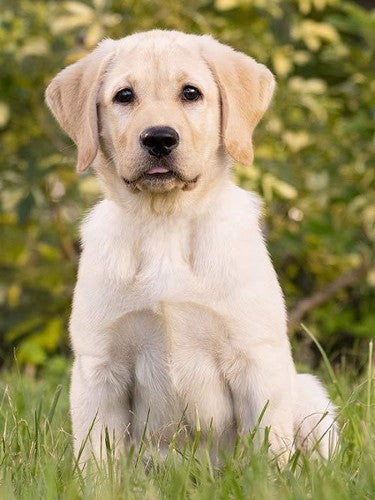

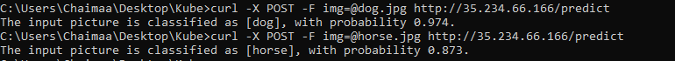

- Test your API with these two images using the external IP:

(Chaimaa Zyami, CC BY-SA 4.0)

(Chaimaa Zyami, CC BY-SA 4.0)

(Chaimaa Zyami, CC BY-SA 4.0)
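If you prefer to look up the external address programmatically rather than reading it from the table, one option is to parse the JSON form of the service (for example, the output of `kubectl get service kubermatic-dl-deployment -o json`). The sketch below assumes the service kept the deployment's name, which is what `kubectl expose` does when no name is given, and that it listens on port 80 as configured above. The function names are hypothetical, and the sample document uses a documentation-only IP address, not real cluster output.

```python
import json


def external_address(service_json):
    """Return the load balancer IP or hostname, or None if not assigned yet."""
    service = json.loads(service_json)
    ingress = service.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    for entry in ingress:
        address = entry.get("ip") or entry.get("hostname")
        if address:
            return address
    return None


def predict_url(service_json, port=80):
    """Build the URL of the /predict endpoint from the service description."""
    address = external_address(service_json)
    if address is None:
        return None  # the cloud provider has not provisioned the address yet
    return f"http://{address}:{port}/predict"


# Hypothetical, trimmed-down service document for illustration only.
SAMPLE_SERVICE = json.dumps({
    "kind": "Service",
    "metadata": {"name": "kubermatic-dl-deployment"},
    "status": {"loadBalancer": {"ingress": [{"ip": "203.0.113.10"}]}},
})

print(predict_url(SAMPLE_SERVICE))
```

While the load balancer is still being provisioned, the `ingress` list is absent and the function returns `None`, so a caller can simply retry after a short wait.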

Summary

In this tutorial, you created a deep learning model to be served as a REST API using Flask. You put the application inside a Docker container, uploaded the container image to Docker Hub, and then, with just a few commands, deployed the app on Kubermatic Kubernetes Platform and exposed it to the world.
