A speech-to-text (STT) system, sometimes called automatic speech recognition (ASR), is what its name implies: a way of transforming spoken words into textual data that can be used later for any purpose.

A text-to-speech (TTS) system, by contrast, generates audio from textual data and files. You give it the text, and it generates the corresponding speech audio.

Both technologies are extremely useful.

They can be used for many applications, such as automating transcription, writing articles by voice alone, creating audiobooks, and enabling complex analysis of information using the generated text files.

In the past, proprietary software and libraries dominated speech-to-text and text-to-speech technologies. Open source speech recognition alternatives either didn’t exist or existed with extreme limitations and no community around them.

This is changing: today there are a lot of open source speech tools and libraries that you can use right now.

Their number has grown even faster recently, thanks to the trend of AI and generative models.


What is a Speech Library?

It is the software engine responsible for transforming voice to text or vice versa, and it is not meant to be used by end users.

Developers will first have to adopt these libraries and use them to create computer programs that can enable speech recognition for users.

Some of them come with pre-trained models that recognize speech in a given language and generate the corresponding text, while others provide only the engine without any trained models, and developers have to train the models themselves.

This can be a complex task, as it requires a deep understanding of machine learning and data handling.

You can think of them as the underlying engines of speech recognition programs.

If you are an ordinary user looking for speech recognition or audio generation for text, then none of these will be suitable for you, as they are meant for development use only.

What is an Open Source STT/TTS Library?

What separates open source speech recognition from proprietary speech recognition is the license: the library used to process the audio must be released under one of the known open source licenses, such as GPL, MIT and others.

Microsoft, NVIDIA and IBM, for example, have their own speech recognition toolkits that they offer to developers, but these are not open source, simply because they are not released under one of the open source licenses.

Check the license of the open source speech-to-text library you are interested in, and if it is an open-source license as identified by OSI, then it is an open source library.
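As a rough illustration of that check, the sketch below compares a license identifier with a small, hand-picked subset of OSI-approved licenses. The names `OSI_APPROVED_SUBSET` and `is_osi_approved` are made up for this example, and the set is deliberately incomplete; the authoritative list is the one published by the OSI.

```python
# A hand-picked subset of SPDX identifiers for OSI-approved licenses.
# This is NOT the full list; the OSI website is the authoritative source.
OSI_APPROVED_SUBSET = {
    "Apache-2.0",
    "MIT",
    "MPL-2.0",
    "BSD-3-Clause",
    "GPL-2.0-only",
    "GPL-3.0-only",
}


def is_osi_approved(spdx_id: str) -> bool:
    """Return True if the identifier is in our small OSI-approved subset."""
    return spdx_id.strip() in OSI_APPROVED_SUBSET


# Licenses of a few libraries covered later in this article.
article_licenses = {
    "Kaldi": "Apache-2.0",
    "Flashlight ASR": "MIT",
    "Coqui TTS": "MPL-2.0",
}

for name, license_id in article_licenses.items():
    print(f"{name}: {license_id} -> OSI-approved: {is_osi_approved(license_id)}")
```

In practice you would read the identifier from the project's LICENSE file or repository metadata rather than typing it by hand.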

What are the Benefits of Using Open Source STT/TTS Software?

Mainly, you get few or no restrictions at all on the commercial usage for your application, as the open source speech libraries will allow you to use them for whatever use case you may need.

Also, most (if not all) open source speech toolkits are free of charge, saving you a lot of money compared with proprietary ones.

So instead of using proprietary speech services and paying for each minute of voice you convert to text, or paying a recurring monthly subscription, you can use the open source alternatives without limits or anyone’s permission.

Top Open Source STT/TTS Systems

In this article we’ll look at a number of these speech systems, their pros and cons, and when each one can be used.

Some of these open source libraries can be used for STT, and some of them can only be used for TTS. Others can be used for both, and we will mention the capabilities of each one so that you can easily choose.

We made sure to select only working, still-maintained and useful software for this list. You can review our criteria for listicle articles on FOSS Post to understand the basis for our selections. Remember that on FOSS Post we only cover open-source software that follows the OSI definition and uses an OSI-approved license. The order of the list is arbitrary and does not reflect our rating of the software.

1. Kaldi

Kaldi is open source speech recognition (STT) software written in C++ and released under the Apache License 2.0.

It works on Windows, macOS and Linux. Its development started back in 2009.

Kaldi’s main feature over some other speech recognition software is that it’s extendable and modular: The community provides tons of 3rd-party modules that you can use for your tasks.

Kaldi also supports deep neural networks, and offers excellent documentation on its website. While the code is mainly written in C++, it’s “wrapped” by Bash and Python scripts.

So if you are just looking for the basic usage of converting speech to text, then you’ll find it easy to accomplish that via either Python or Bash. You may also wish to check Kaldi Active Grammar, a pre-built Python engine with English-trained models that are ready to use.

Learn more about Kaldi speech recognition from its official website.

2. Julius

Julius is probably one of the oldest speech recognition (STT) software projects: its development started in 1991 at the University of Kyoto, and its ownership was then transferred to an independent project in 2005.

A lot of open source applications use it as their engine (Think of KDE Simon).

Julius’ main features include its ability to perform real-time STT processing, low memory usage (less than 64 MB for 20,000 words), the ability to produce N-best/word-graph output, the ability to work as a server unit, and a lot more.

This software was mainly built for academic and research purposes. It is written in C, and works on Linux, Windows, macOS and even Android (on smartphones).

Currently, it supports only English and Japanese.

The software is probably available in your Linux distribution’s repository; just search for the julius package in your package manager.

You can access Julius source code from GitHub.

3. Flashlight ASR (Formerly Wav2Letter++)

If you are looking for something modern, then this one is worth considering.

Flashlight ASR is open source speech recognition software that was released by Facebook’s AI Research team. It is written in C++ and released under the MIT license.

In 2018, Facebook described its library as “the fastest state-of-the-art speech recognition system available”.

The concepts on which this tool is built make it optimized for performance by default.

Facebook’s machine learning library Flashlight is used as the underlying core of Flashlight ASR. The software requires that you first train a model for the language you desire before you can run the speech recognition process.

No pre-built support for any language (including English) is available. It’s just a machine-learning-driven tool to convert speech to text. So you will have to train and build your own models.

You can learn more about it from the following link.

4. PaddleSpeech (Formerly DeepSpeech2)

Researchers at the Chinese giant Baidu are also working on their own speech recognition and text-to-speech toolkit, called PaddleSpeech.

The speech toolkit is built on the PaddlePaddle deep learning framework, and provides many features such as:

- Speech-to-Text and speech recognition (ASR) support.

- Text-to-Speech support.

- State-of-the-art performance in audio transcription; it even won the NAACL 2022 Best Demo Award.

- Support for many large language models (LLMs), mainly for English and Chinese languages.

The engine can be trained on any model and for any language you desire.

PaddleSpeech’s source code is written in Python, so it should be easy for you to get familiar with it if that’s the language you use.

5. Vosk

One of the newest open source speech recognition systems, as its development just started in 2020.

Unlike other systems in this list, Vosk is ready to use right after installation, as it supports more than 20 languages (English, German, French, Turkish…) with portable pre-trained models already available for users.

Vosk offers small models (around 100 MB in size) that are suitable for general tasks and lightweight devices, and larger models (up to 1.5 GB in size) for better performance and results.

It also works on Raspberry Pi, iOS and Android devices, and provides a streaming API that allows you to connect to it to do your speech recognition tasks online.

Vosk has bindings for Java, Python, JavaScript, C# and NodeJS.

Learn more about Vosk from its official website.
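To give a feel for how little code a basic transcription takes, here is a minimal sketch using Vosk's Python binding. It assumes you have installed the `vosk` package and downloaded and unpacked one of the pre-trained models; the function names and the `model_dir` argument are our own choices for this example. The audio is expected to be a 16-bit mono PCM WAV file, which is the format Vosk's own examples use.

```python
import json
import wave


def is_supported_wav(path: str) -> bool:
    """Check that a WAV file is 16-bit mono PCM."""
    with wave.open(path, "rb") as wf:
        return (
            wf.getnchannels() == 1
            and wf.getsampwidth() == 2
            and wf.getcomptype() == "NONE"
        )


def transcribe(path: str, model_dir: str) -> str:
    """Transcribe a WAV file using an unpacked Vosk model directory."""
    # Imported here so the helper above works without Vosk installed.
    from vosk import Model, KaldiRecognizer

    if not is_supported_wav(path):
        raise ValueError("Audio must be a 16-bit mono PCM WAV file")

    model = Model(model_dir)
    pieces = []
    with wave.open(path, "rb") as wf:
        rec = KaldiRecognizer(model, wf.getframerate())
        while True:
            data = wf.readframes(4000)
            if not data:
                break
            # AcceptWaveform returns True once an utterance is complete.
            if rec.AcceptWaveform(data):
                pieces.append(json.loads(rec.Result()).get("text", ""))
        # Collect whatever is left after the last chunk.
        pieces.append(json.loads(rec.FinalResult()).get("text", ""))
    return " ".join(p for p in pieces if p)
```

Calling `transcribe("speech.wav", "path/to/model")` with your own file and model directory should return the recognized text as a single string.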

6. Athena

An end-to-end speech recognition engine that implements ASR.

It is written in Python and licensed under the Apache 2.0 license. It supports unsupervised pre-training and multi-GPU training, either on a single machine or across multiple machines. It is built on top of TensorFlow.

It has a large model available for both English and Chinese.

Visit Athena source code.

7. ESPnet

Written in Python on top of PyTorch, ESPnet can be used for both speech recognition (ASR/STT) and text-to-speech (TTS) tasks.

It follows the Kaldi style for data processing, so migrating from Kaldi to ESPnet should be relatively easy.

The main selling point of ESPnet is the state-of-the-art performance it achieves in many benchmarks, and its support for other language processing tasks such as machine translation (MT) and speech translation (ST).

The library is licensed under the Apache 2.0 license.

You can access ESPnet from the following link.

8. Whisper

One of the newest speech recognition toolkits in the family.

It was developed by the famous OpenAI company (the same company behind ChatGPT).

The main marketing point for Whisper is that it does not specialize in a set of training datasets for specific languages only; instead, it can be used with any suitable model and for any language.

It was trained on 680,000 hours of audio, about one-third of which was non-English.

It supports speech-to-text, speech translation into English, and language identification; it does not generate speech from text. The company claims that its toolkit makes 50% fewer errors than other toolkits on the market.

Learn more about Whisper from its official website.
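A minimal sketch of transcribing a file with Whisper's Python package might look like the following. It assumes the `openai-whisper` package and ffmpeg are installed; the helper `format_segments` and the wrapper `transcribe` are our own names, not part of Whisper itself.

```python
def format_segments(segments) -> str:
    """Render Whisper-style segments as '[start -> end] text' lines."""
    lines = []
    for seg in segments:
        lines.append(
            f"[{seg['start']:.2f}s -> {seg['end']:.2f}s] {seg['text'].strip()}"
        )
    return "\n".join(lines)


def transcribe(path: str, model_name: str = "base", translate: bool = False) -> str:
    """Transcribe an audio file, or translate it into English."""
    # Imported lazily so format_segments works without Whisper installed.
    import whisper

    model = whisper.load_model(model_name)
    task = "translate" if translate else "transcribe"
    result = model.transcribe(path, task=task)
    # The result also holds the full text under result["text"].
    return format_segments(result["segments"])
```

Smaller models such as `"base"` load and run faster, while larger ones generally give better accuracy, so the model name is left as a parameter here.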

9. StyleTTS2

Also one of the newest libraries on this list, as it was just released in the middle of November 2023.


It combines style diffusion with adversarial training using large speech language models (SLMs) in order to achieve more advanced results than the previous generation of models.

The makers of the model published it along with a research paper, where they make the following claim about their work:

This work achieves the first human-level TTS synthesis on both single and multispeaker datasets, showcasing the potential of style diffusion and adversarial training with large SLMs.

It is written in Python, and has some Jupyter notebooks shipped with it to demonstrate how to use it. The model is licensed under the MIT license.

There is an online demo where you can see different benchmarks of the model: https://styletts2.github.io/

10. Coqui TTS

Coqui TTS is a deep learning toolkit designed for Text-to-Speech (TTS) generation, implemented primarily in Python. It is licensed under the MPL 2.0 license.

The software leverages several advanced libraries and frameworks such as PyTorch to facilitate high-performance model training and inference. Notably, Coqui TTS supports multiple architectures including Tacotron2, Glow-TTS, FastSpeech variants, and various vocoder models like MelGAN and WaveRNN.

This modular design allows users not only to utilize pre-trained models available in many languages but also offers tools for fine-tuning existing models or developing new ones tailored to specific needs.

The main features of Coqui TTS include efficient multi-speaker support that enables the synthesis of voices from different speakers using shared datasets while maintaining distinct vocal characteristics.

It also has capabilities such as voice cloning through YourTTS integration and real-time streaming with low latency (<200ms), making it suitable for both academic research and production environments that require scalable solutions.

https://github.com/coqui-ai/TTS
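For a quick first test of Coqui TTS, a sketch along these lines should be enough. It assumes the `TTS` package is installed via pip; the wrapper function `synthesize_to_file` is our own, and the default model name is one of the pre-trained English models from the project's model list, which is downloaded on first use.

```python
def synthesize_to_file(
    text: str,
    out_path: str,
    model_name: str = "tts_models/en/ljspeech/tacotron2-DDC",
) -> str:
    """Synthesize `text` into a WAV file and return the output path."""
    if not text.strip():
        raise ValueError("text must not be empty")

    # Imported lazily; loading a model can take a while on first run.
    from TTS.api import TTS

    tts = TTS(model_name=model_name)
    tts.tts_to_file(text=text, file_path=out_path)
    return out_path
```

For example, `synthesize_to_file("Hello from Coqui TTS.", "hello.wav")` should write the spoken sentence to `hello.wav`.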

11. GPT-SoVITS

GPT-SoVITS is a software tool designed for few-shot voice conversion and text-to-speech (TTS) applications. It is primarily developed in Python and licensed under the MIT license.

One of its main features is that only one minute of vocal samples is needed for effective model fine-tuning. The platform supports zero-shot capabilities that allow immediate speech synthesis from a five-second audio sample while also offering cross-lingual support in languages like English, Japanese, and Chinese.

In other words, training models with this library takes far less data, and is therefore much easier, than with the other ones.

Additionally, it provides functionalities for enhanced emotional control over generated speech and allows customization through various pre-trained models available.

https://github.com/RVC-Boss/GPT-SoVITS

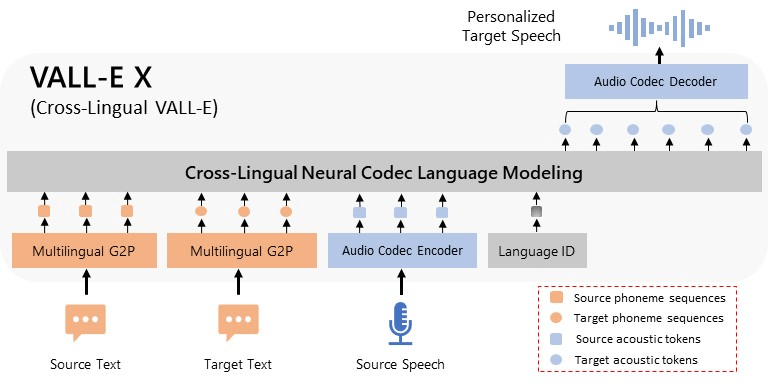

12. VALL-E X

VALL-E X is an open-source implementation of Microsoft’s VALL-E X zero-shot text-to-speech (TTS) model, primarily developed in Python and licensed under the MIT license.

The library allows cloning voices with just a short audio sample while maintaining high-quality speech synthesis across multiple languages including English, Chinese, and Japanese.

It also has advanced functionalities like emotion control during speech generation and accent manipulation when synthesizing different language prompts.

Users can also experiment with voice cloning by providing minimal recordings alongside transcripts or allowing the system’s integrated Whisper model to generate transcriptions automatically from input audio files.

VALL-E X is very close to the state-of-the-art performance in its category.

https://github.com/Plachtaa/VALL-E-X

13. Amphion

Amphion is an open-source toolkit designed for audio, music, and speech generation.

Licensed under the MIT license, it is primarily developed in Python with supporting components written in Jupyter Notebook and Shell scripting.

The software implements several model architectures from other projects, such as FastSpeech2, VITS, VALL-E and NaturalSpeech2, for text-to-speech (TTS) tasks.

One of Amphion’s standout features is that it offers visualizations that help users understand what it is doing during TTS and audio generation tasks, which makes it a very good tool for educational and academic purposes.

Additionally, it comes with a large dataset called “Emilia” that contains more than 100,000 hours of speech recordings that can be used for training models in 6 languages including English.

https://github.com/open-mmlab/Amphion

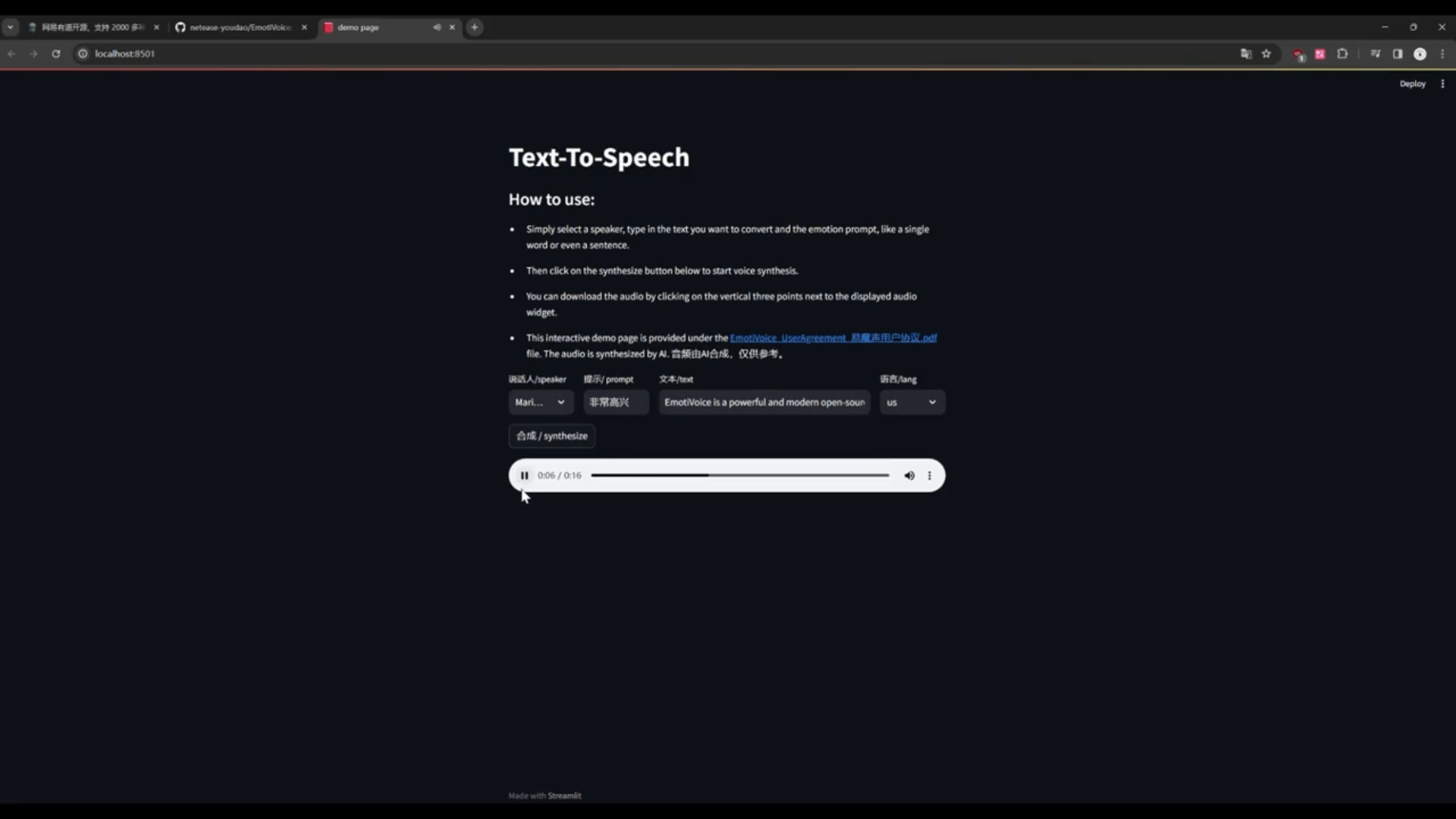

14. EmotiVoice

EmotiVoice is an open-source text-to-speech (TTS) engine primarily developed in Python, utilizing libraries such as PyTorch and various audio processing tools. It is licensed under the Apache 2.0 license.

It supports both English and Chinese languages while offering over 2000 unique voices for users to choose from.

The software supports emotional synthesis, allowing the generation of speech that conveys a wide range of emotions like happiness, sadness, anger, and excitement. This functionality enhances user engagement by providing more expressive voice outputs compared to traditional TTS systems.

Unlike most software in our list, this one also includes a user-friendly web interface that can be used to run and manage the model.

It also ships with scripting capabilities suitable for batch-processing tasks.

https://github.com/netease-youdao/EmotiVoice

15. Piper

Piper is a fast, local neural text-to-speech (TTS) system designed for embedded devices such as Raspberry Pi.

The software is primarily written in C++ and is licensed under the MIT license, but it can also be installed with pip and used as a Python library.

Piper supports various voice models trained with VITS technology, enabling it to produce high-quality speech synthesis across multiple languages, including English, Spanish, French and German, among others.

You can listen to its sample demos in all supported languages from the following URL: https://rhasspy.github.io/piper-samples/

One of the standout features of Piper is its ability to stream audio output in real-time while synthesizing speech from input text. Additionally, users can customize their output clips by selecting different speakers when utilizing multi-speaker models via specific commands during runtime.

Piper is well suited to powering a home assistant installed on a Raspberry Pi device, and this should be treated as its main advantage. Other models in this article may not be able to run on such limited hardware.

https://github.com/rhasspy/piper
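As a small illustration, the sketch below drives the `piper` command-line tool from Python. It assumes `piper` is on your PATH and that you have downloaded a voice model file; the function names are our own, and the model filename in the usage note is only an example of a downloaded voice. The text is passed on standard input, and the `--speaker` option only matters for multi-speaker models.

```python
import subprocess


def build_piper_command(model_path, output_path, speaker=None):
    """Build the argument list for a piper invocation."""
    cmd = ["piper", "--model", model_path, "--output_file", output_path]
    if speaker is not None:
        # Speaker id for multi-speaker voice models.
        cmd += ["--speaker", str(speaker)]
    return cmd


def speak_to_file(text, model_path, output_path, speaker=None):
    """Synthesize `text` into a WAV file by piping it to piper."""
    cmd = build_piper_command(model_path, output_path, speaker)
    # piper reads the text to synthesize from standard input.
    subprocess.run(cmd, input=text.encode("utf-8"), check=True)
    return output_path
```

For example, `speak_to_file("Welcome home.", "en_US-lessac-medium.onnx", "welcome.wav")` should produce `welcome.wav` if that voice file is present in the current directory.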

What is the Best Open Source Speech Recognition System?

If you are building a small application that you want to be portable everywhere, then Vosk (for STT) or Piper (for TTS) is your best option, as both can be used from Python, support a lot of languages, and can work on devices with low resources such as the Raspberry Pi.

Vosk also provides both large and small models to fit your needs.

If, however, you want to train and build your own models for much more complex tasks, then any of PaddleSpeech, Whisper, GPT-SoVITS, EmotiVoice and VALL-E X should be more than enough for your needs, as they are the most modern state-of-the-art toolkits.

Traditionally, Julius and Kaldi are also widely cited in the academic literature, but they are “boring” and lack the conveniences and new features of the other libraries.

So pick the one that best fits your own needs and requirements.

Frequently Asked Questions (FAQs)

Here are some frequent questions that we get asked about this article along with their answers:

Why did you not mention the DeepSpeech project by Mozilla?

DeepSpeech by Mozilla was abandoned many years ago and it is no longer under active development.

We recommend using other open-source models on this page that are still maintained.

Why did you remove OpenSeq2Seq from your list?

Just like DeepSpeech by Mozilla, OpenSeq2Seq from NVIDIA is no longer under active development and was abandoned many years ago.

Try using other models in our list.

Some other speech models are not mentioned in your article

Please review the listicle criteria mentioned earlier to understand why we made our choices. We may have missed a few, but all of those mentioned were among the top options available at the time of writing this article.

You are always welcome to leave us a comment about an addition that you think should be made to this article.

How about you compare the performance of these models?

That could be nice for a research paper project or a PhD thesis.

However, this is only a small listicle article to help you get started with voice and text recognition, and it cannot carry the weight of such a project.

Setting up these models and trying them with real data may take a lot of time, and it’s up to you as a developer to choose the best one that fits your needs.

Conclusion

The speech recognition and TTS category is starting to become mainly driven by open source technologies, a situation that seemed to be very far-fetched a few years ago.

The current open source speech recognition tools are very modern and bleeding-edge, and one can use them for almost any purpose instead of depending on Microsoft’s or IBM’s toolkits.

If you have any other recommendations for this list, or comments in general, we’d love to hear them below.
