Wikipedia is one of the most frequently visited websites in the world. The vast online encyclopedia, editable by anyone, has become the go-to source for general information on any subject. However, the crowdsourcing that Wikipedia relies on also opens the door to spin and whitewashing: edits that may be less than factual in nature. To help journalists, citizens, and activists track these edits, TWG (The Working Group) partnered with Metro News and the Center for Investigative Reporting to build WikiWash.

WikiWash is an open source tool that allows anybody with a web browser to observe the history of a Wikipedia page and to see edits happen in real time. Journalists have already been using WikiWash to dig into the history of controversial Wikipedia pages.

Building WikiWash, a user-friendly app built on top of Wikipedia's massive dataset, involved overcoming a number of technical challenges. Below, we've detailed our solutions to some of these challenges that others building Wikipedia-based software may find helpful.

To facilitate open source contributions to the project, WikiWash has been built in JavaScript, one of the most accessible and flexible programming languages popular today. Some of the web's most popular new technologies were also used, including Node.js, Express.js, Angular.js and Socket.IO.

Tapping into Wikipedia's database

To fetch data about the history of a Wikipedia page, WikiWash relies on Wikipedia's API. Wikipedia provides access to nearly all of the data in its massive database through its API, allowing developers to build applications based on the data. Unfortunately, not all of this data is available quickly. Much of the data we need from Wikipedia, such as the usernames and modification times tied to each revision, is available near-instantly. However, the actual contents of each revision of each Wikipedia page are quite slow to generate. To understand why this is, we need to first look at how Wikipedia works.
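As a rough illustration of the fast, metadata-only kind of request, here is a minimal sketch against the public MediaWiki Action API. The helper names (`buildRevisionListUrl`, `fetchRevisionList`) are hypothetical and are not taken from the WikiWash source, and the sketch assumes a runtime with a global `fetch` (for example Node.js 18 or later).

```javascript
// Hypothetical helpers, not WikiWash's actual code.
const API_ENDPOINT = "https://en.wikipedia.org/w/api.php";

// Builds a URL that asks only for revision metadata (IDs, timestamps,
// usernames, sizes), which the API can return without rendering any page.
function buildRevisionListUrl(title, limit = 50) {
  const params = new URLSearchParams({
    action: "query",
    prop: "revisions",
    titles: title,
    rvprop: "ids|timestamp|user|size",
    rvlimit: String(limit),
    format: "json",
    formatversion: "2",
  });
  return `${API_ENDPOINT}?${params}`;
}

// Fetches the metadata list; returns an empty array if the page has no revisions.
async function fetchRevisionList(title, limit = 50) {
  const response = await fetch(buildRevisionListUrl(title, limit));
  if (!response.ok) throw new Error(`HTTP ${response.status}`);
  const data = await response.json();
  return data.query.pages[0].revisions ?? [];
}
```

Because `rvprop` leaves out `content`, this request carries none of the rendering cost discussed in the rest of this section.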

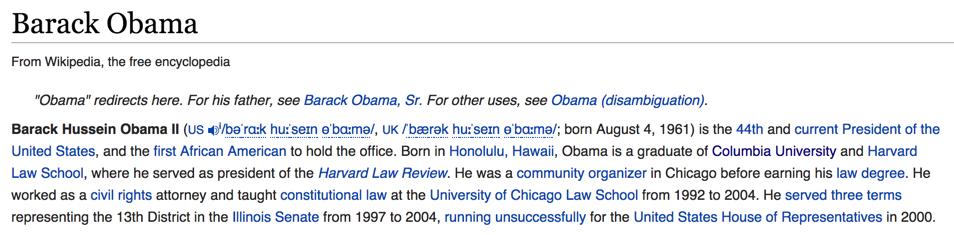

Let's look at one of Wikipedia's most-accessed pages, the page for current U.S. President Barack Obama. The opening paragraph of this page is a human-readable block of text, links, and symbols:

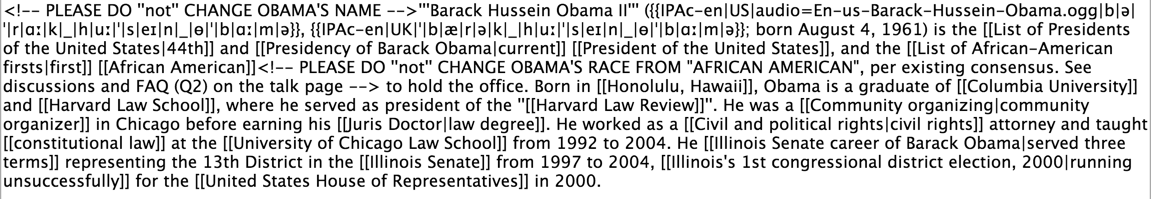

However, Wikipedia stores this page (and every page in its database) in a format called "wiki markup" or "wikitext." The corresponding intro paragraph above looks like this in Wikitext:

To prepare every page for a user to view, Wikipedia must first convert the page from the Wikitext stored in its database into HTML, the language used by web browsers. This operation is highly demanding, for a number of reasons:

- Wikitext allows the use of dynamic templates in any page, allowing the inclusion of other small pages within a page. For instance, the "infobox" that appears on the right side of many Wikipedia pages is one of those templates. Each template must be fetched separately (as it too is a page that can be modified) and customized with the information given to it for the specific page it will be placed in. (The Barack Obama page referenced earlier contains 591 templates at the time of this writing.)

- Every link in the page must be checked by Wikipedia to verify that the link is not broken, and to fetch the name of the page being linked to. (The Barack Obama page contains 758 links to other pages.)

- References and citations must be computed, linked to, and properly formatted at the bottom of the page. For many pages, this is a non-issue, but many well-written pages have hundreds upon hundreds of citations. (The Barack Obama example has 464 citations.)

Given the amount of work that goes into generating each Wikipedia page, how is Wikipedia so fast in the first place? The answer is simple: Wikipedia makes extensive use of caching. Every page, after editing, has its most-recent revision generated and saved. Every time someone visits the page in the future, Wikipedia no longer needs to convert the page into HTML, but can instead serve the most recent version from the cache.

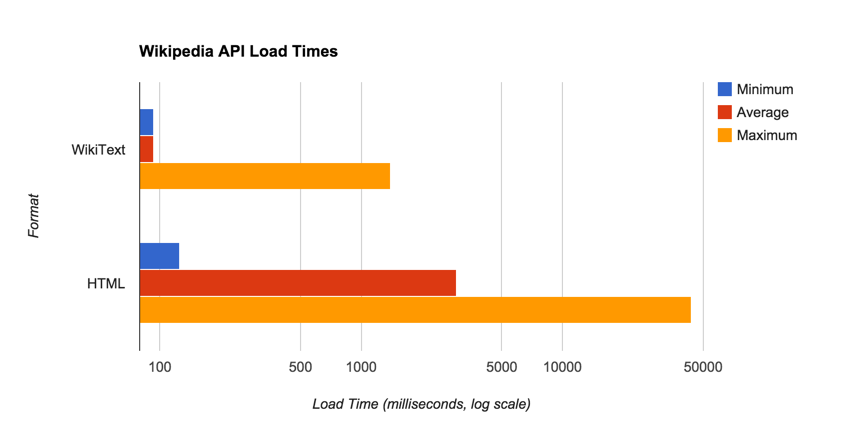

WikiWash, on the other hand, has no such luxury. Each time someone uses WikiWash to view the history of a page, Wikipedia must convert each version of the page to HTML, as Wikipedia only stores cached copies of the most recent version of each page. Unfortunately, this adds many seconds to the load time for each revision.
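To make the two kinds of request concrete, the sketch below builds one URL for the stored wikitext of a revision and one for its rendered HTML, plus a rough timing helper. The function names are hypothetical, the parameters are those of the public MediaWiki Action API, and `timeRequest` assumes global `fetch` and `performance` (Node.js 18 or later, or a browser). It is not how WikiWash itself is written.

```javascript
const WIKIPEDIA_API = "https://en.wikipedia.org/w/api.php";

// URL for the raw wikitext of one revision (no rendering required).
function buildWikitextUrl(revId) {
  const params = new URLSearchParams({
    action: "query",
    prop: "revisions",
    revids: String(revId),
    rvprop: "content",
    rvslots: "main",
    format: "json",
    formatversion: "2",
  });
  return `${WIKIPEDIA_API}?${params}`;
}

// URL for the rendered HTML of the same revision; for an old revision
// the server has to parse the wikitext before it can answer.
function buildRenderedHtmlUrl(revId) {
  const params = new URLSearchParams({
    action: "parse",
    oldid: String(revId),
    prop: "text",
    format: "json",
    formatversion: "2",
  });
  return `${WIKIPEDIA_API}?${params}`;
}

// Rough wall-clock timing of a single request, in milliseconds.
async function timeRequest(url) {
  const start = performance.now();
  const response = await fetch(url);
  await response.text(); // wait for the full body, not just the headers
  return performance.now() - start;
}
```

Timing both URLs for the same revision ID is one way to reproduce the kind of comparison described here, although individual results will vary with page size and server load.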

Load times for both WikiText and HTML versions of a random sample of revisions on Wikipedia. Note how the act of rendering a page to HTML takes 30x longer than simply fetching the WikiText version of the page.

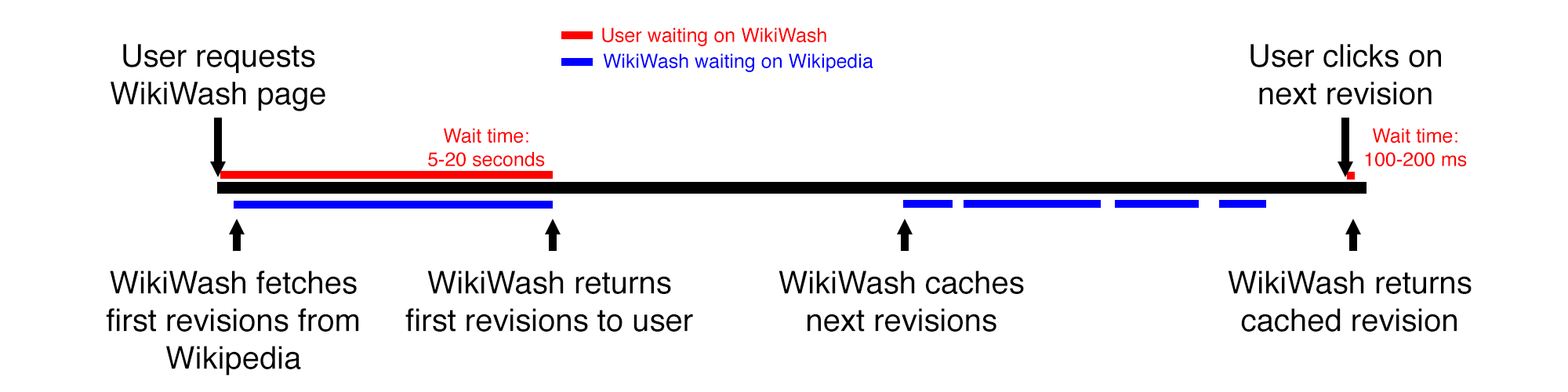

To mitigate this issue in WikiWash, we've implemented a strategy called preemptive caching to make revisions load faster. When a user loads a page in WikiWash, we immediately request the page's two most recent revisions from Wikipedia. This initial load can take anywhere from 3 to 30 seconds, depending on the size of the page.

We noticed that, after reading the first revision of a page, most users click on the next revision in the list only seconds later. To make this second click much snappier, WikiWash preemptively loads each revision in the list from Wikipedia. By the time a user clicks on a revision, WikiWash will likely have cached it already. (We've also paid attention to Wikipedia's API usage guidelines to avoid unnecessary load on their servers, while ensuring a smooth user experience.)

This caching solution isn't foolproof, as users must still wait multiple seconds for the first page to load. However, in the average case, this strategy makes WikiWash appear much more responsive. Luckily, nearly all of the cached data is immutable: with the exception of included templates, the content within a revision of a Wikipedia page never changes. This means that we can cache each revision indefinitely to speed up page loads for all future visitors to a certain revision, and indeed, WikiWash does just that.
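The preemptive caching idea can be sketched in a few lines. This is a minimal in-memory illustration, not WikiWash's implementation: the `RevisionCache` class and the `fetchRevision` callback are hypothetical names, and a real deployment would presumably use persistent storage rather than a `Map`.

```javascript
// Minimal sketch of a preemptive cache keyed by revision ID.
class RevisionCache {
  // fetchRevision: async (revId) => rendered HTML for that revision
  constructor(fetchRevision) {
    this.fetchRevision = fetchRevision;
    this.entries = new Map(); // revId -> Promise of rendered HTML
  }

  // Returns the cached promise, or starts a fetch and caches it.
  // Storing the promise means concurrent callers share one request.
  get(revId) {
    if (!this.entries.has(revId)) {
      const pending = this.fetchRevision(revId).catch((err) => {
        this.entries.delete(revId); // never cache a failure
        throw err;
      });
      this.entries.set(revId, pending);
    }
    return this.entries.get(revId);
  }

  // Warms the cache one revision at a time, so the upstream API
  // sees a steady trickle of requests rather than a burst.
  async prefetch(revIds) {
    for (const revId of revIds) {
      try {
        await this.get(revId);
      } catch {
        // Ignore: the fetch is retried if the user clicks this revision.
      }
    }
  }
}
```

Entries never expire here because a revision's content does not change once saved; the template caveat mentioned above is the one case where a stored copy could drift from what Wikipedia would render today.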

Showing changes in a page's history

As WikiWash is a history browser of sorts for Wikipedia, its main purpose is to show differences between revisions of pages. To do so, it uses a complex algorithm called Myers' diff algorithm to determine what text has been added to or removed from a page. Rather than implement the algorithm from scratch, WikiWash uses a JavaScript port of the algorithm implemented by Neil Fraser at Google.

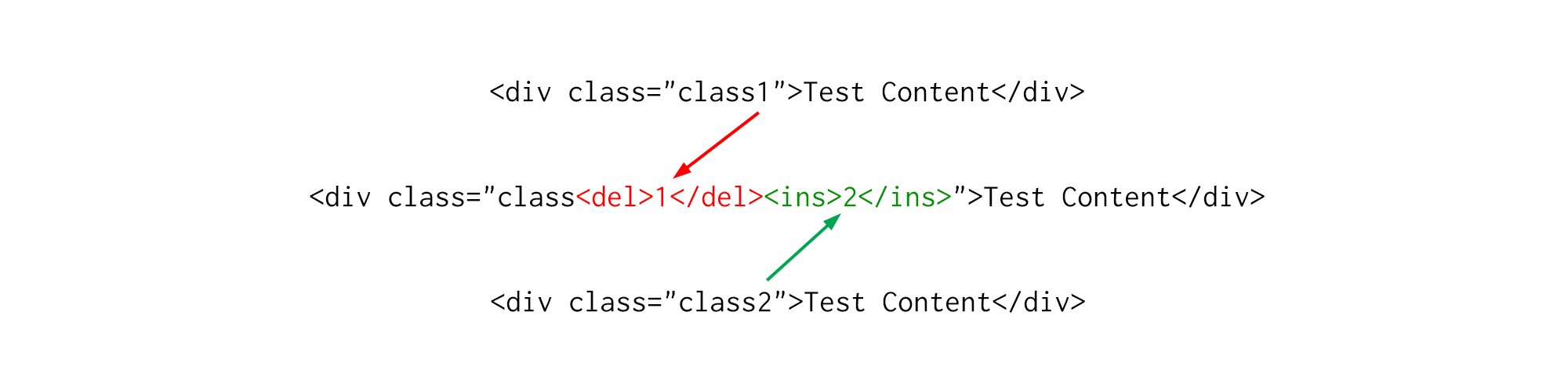
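To show the shape of a character-level diff result, here is a small self-contained stand-in. It deliberately does not implement Myers' algorithm: it uses a plain longest-common-subsequence table, which is simpler but much slower on large inputs. The function name `simpleDiff` is hypothetical, and the output format, a list of `[operation, text]` pairs with -1 for deletion, 0 for unchanged text, and 1 for insertion, is an assumption modelled on the convention used by diff libraries such as diff-match-patch.

```javascript
const DIFF_DELETE = -1;
const DIFF_EQUAL = 0;
const DIFF_INSERT = 1;

// Character-level diff via a longest-common-subsequence table.
// Illustrative only: O(a.length * b.length) time and memory.
function simpleDiff(a, b) {
  // lcs[i][j] = length of the LCS of a.slice(i) and b.slice(j)
  const lcs = Array.from({ length: a.length + 1 }, () =>
    new Array(b.length + 1).fill(0)
  );
  for (let i = a.length - 1; i >= 0; i--) {
    for (let j = b.length - 1; j >= 0; j--) {
      lcs[i][j] =
        a[i] === b[j]
          ? lcs[i + 1][j + 1] + 1
          : Math.max(lcs[i + 1][j], lcs[i][j + 1]);
    }
  }

  const diffs = [];
  // Appends a character, merging it into the previous run when the op matches.
  const push = (op, ch) => {
    const last = diffs[diffs.length - 1];
    if (last && last[0] === op) last[1] += ch;
    else diffs.push([op, ch]);
  };

  let i = 0;
  let j = 0;
  while (i < a.length && j < b.length) {
    if (a[i] === b[j]) {
      push(DIFF_EQUAL, a[i]);
      i++;
      j++;
    } else if (lcs[i + 1][j] >= lcs[i][j + 1]) {
      push(DIFF_DELETE, a[i++]);
    } else {
      push(DIFF_INSERT, b[j++]);
    }
  }
  while (i < a.length) push(DIFF_DELETE, a[i++]);
  while (j < b.length) push(DIFF_INSERT, b[j++]);
  return diffs;
}

console.log(simpleDiff("the cat sat", "the dog sat"));
// → [ [ 0, 'the ' ], [ -1, 'cat' ], [ 1, 'dog' ], [ 0, ' sat' ] ]
```

Joining every pair that is not an insertion reproduces the old text, and joining every pair that is not a deletion reproduces the new text.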

This algorithm works wonderfully for showing the differences between plain text documents, but its results are unhelpful when given complex structured content like the HTML that we receive from Wikipedia. WikiWash inserts HTML tags to show differences in content, but if a difference occurs within an existing HTML tag, a naive process would insert HTML tags within other HTML tags, resulting in invalid HTML:

When inserting HTML tags to indicate differences in HTML, the resulting document can be invalid. Here, deleted text is shown in red surrounded by <del> tags, while inserted text is shown in green surrounded by <ins> tags.

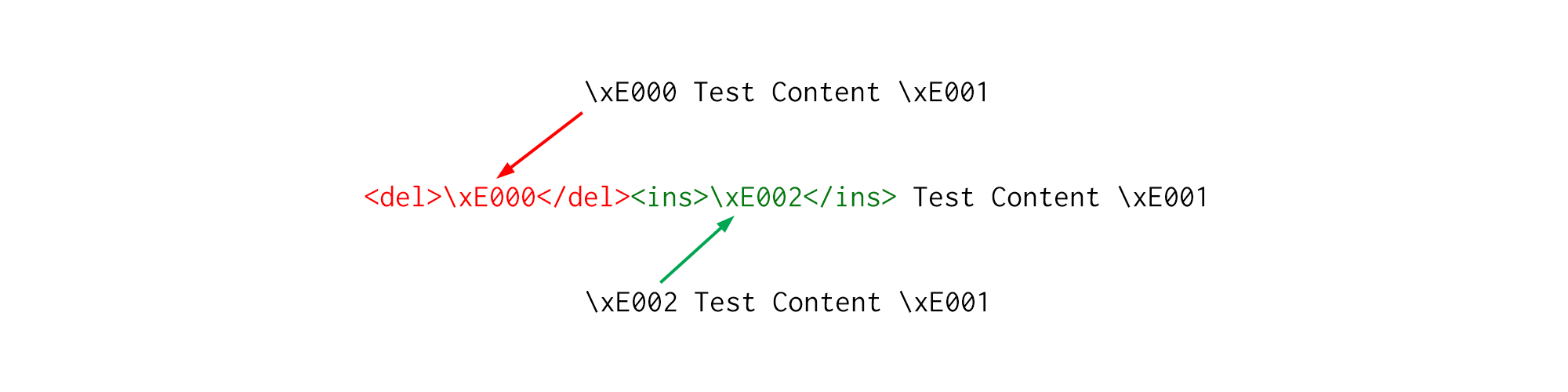
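The failure mode is easy to reproduce. The sketch below takes a hand-written diff result (the input is hypothetical, and the `[operation, text]` format with -1, 0, and 1 is an assumption modelled on diff-match-patch-style output) and wraps each change in `<ins>` or `<del>` without any awareness of HTML structure.

```javascript
// Naive renderer: wraps every change in <ins>/<del>, wherever it falls.
function renderNaively(diffs) {
  return diffs
    .map(([op, text]) => {
      if (op === 1) return `<ins>${text}</ins>`;
      if (op === -1) return `<del>${text}</del>`;
      return text;
    })
    .join("");
}

// Hypothetical character-level diff in which only the class name changed.
const classChange = [
  [0, '<div class="class'],
  [-1, "1"],
  [1, "2"],
  [0, '">Hello</div>'],
];

console.log(renderNaively(classChange));
// → <div class="class<del>1</del><ins>2</ins>">Hello</div>
```

The `<del>` and `<ins>` tags end up inside the attribute value of the `<div>`, so a browser can no longer parse the opening tag as intended.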

To circumvent this issue, WikiWash processes the HTML before taking its diff, then processes the output of the diff. Before running the algorithm to determine differences, WikiWash replaces each HTML tag with a single Unicode character drawn from the Unicode Private Use Area, a range whose characters are not expected to appear in Wikipedia articles. This works because the diff algorithm finds differences character by character. By replacing each tag with a unique character, we force each difference detected by the algorithm to be "all-or-nothing": either the tag has changed entirely, or it has not changed at all.

Replacing HTML tags with unique Unicode characters, here represented by labels like "\xE000," prevents the diff algorithm from altering the insides of tags, which prevents WikiWash from generating invalid HTML.

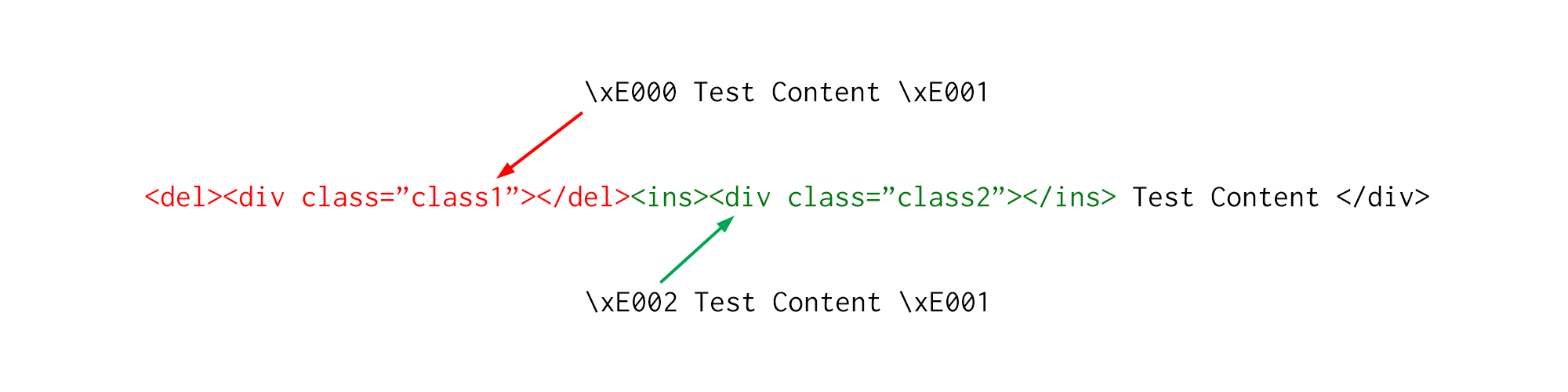
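A minimal sketch of this encoding step, together with the inverse step used after diffing, might look like the following. The function names `encodeTags` and `decodeTags` are hypothetical, and the tag-matching regular expression is a simplification that assumes no `>` character appears inside an attribute value.

```javascript
const PUA_START = 0xe000; // first code point of the Private Use Area
const PUA_END = 0xf8ff; // last code point of the Private Use Area

// Replaces each distinct tag with one Private Use Area character.
// The same table must be shared by both revisions being compared,
// so that identical tags map to identical characters.
function encodeTags(html, table) {
  return html.replace(/<[^>]+>/g, (tag) => {
    if (!table.has(tag)) {
      const codePoint = PUA_START + table.size;
      if (codePoint > PUA_END) throw new Error("Too many distinct tags");
      table.set(tag, String.fromCharCode(codePoint));
    }
    return table.get(tag);
  });
}

// Restores the original tags from their placeholder characters.
function decodeTags(text, table) {
  const reverse = new Map([...table].map(([tag, ch]) => [ch, tag]));
  return text.replace(/[\uE000-\uF8FF]/g, (ch) => reverse.get(ch) ?? ch);
}

const table = new Map();
const before = encodeTags('<div class="class1">Hi</div>', table);
const after = encodeTags('<div class="class2">Hi</div>', table);
// before is "\uE000Hi\uE001" and after is "\uE002Hi\uE001":
// the two opening tags differ by one whole character, while the
// shared closing tag maps to the same character in both strings.
```

A character-level diff of `before` and `after` can now only report the opening tag as replaced in full, never as partially edited.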

After running the diff algorithm on the resulting output, WikiWash decodes the text by replacing each Unicode character with its corresponding HTML tag. The result is HTML that is now syntactically valid – that is, no tags are broken. However, one more issue remains: the resulting document may have an incorrect structure. To see why this is, let's look at the above example translated back into HTML:

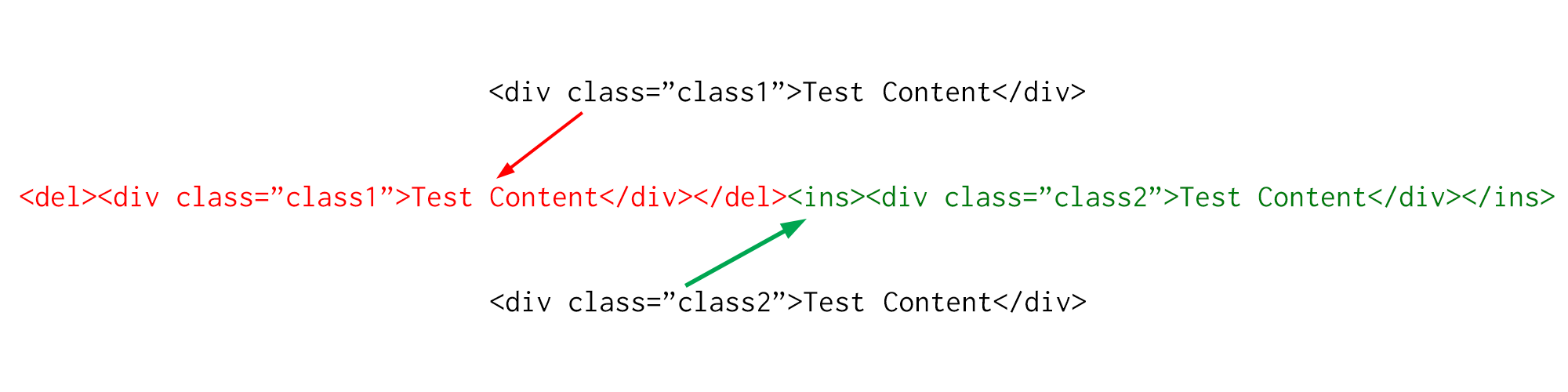

The snippet of HTML in the middle shows that an opening "div" tag with class "class1" was deleted, and that an opening "div" tag with class "class2" was inserted. However, as HTML is a hierarchical or tree-based language, this snippet is invalid. While it's true that the opening "div" tag was replaced, the correct way to represent this would be to include both the start and end of the tag (from the opening "<div" to the closing "</div>") in the difference. This is what WikiWash does – when it detects that a tag's start changed, it finds the entire tag from its open to close and surrounds it with an insertion or deletion tag like so:

This solution doesn't work perfectly: it fails if the HTML that we receive from Wikipedia is malformed, or if the differences that we receive from the diff algorithm are formatted in an unexpected way. However, it solves the problem in most of the cases we've tried. (Observant readers might suggest using a tree-based diff algorithm to solve this problem completely. Unfortunately, such algorithms are fairly slow in practice and difficult to implement.)
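The tag-expansion step described above, wrapping a whole element once its opening tag has changed, could be sketched as follows. Both function names are hypothetical, and the approach (counting nested tags of the same name with a regular expression) is an assumption rather than WikiWash's actual code. It shares the stated limitation: it gives up on malformed HTML, and it also does not handle void elements written without a trailing slash.

```javascript
// Given the index of an opening tag, returns the index just past the
// matching closing tag, or -1 if the element is never closed.
function findElementEnd(html, openStart) {
  const nameMatch = /^<([a-zA-Z][a-zA-Z0-9]*)/.exec(html.slice(openStart));
  if (!nameMatch) return -1;
  const name = nameMatch[1];

  // Matches opening and closing tags with this exact name only.
  const tagPattern = new RegExp(`<(/?)${name}\\b[^>]*>`, "gi");
  tagPattern.lastIndex = openStart;

  let depth = 0;
  let match;
  while ((match = tagPattern.exec(html)) !== null) {
    const isClosing = match[1] === "/";
    const isSelfClosing = match[0].endsWith("/>");
    if (isClosing) depth--;
    else if (!isSelfClosing) depth++;
    if (depth === 0) return tagPattern.lastIndex;
  }
  return -1; // unbalanced markup
}

// Wraps the whole element starting at openStart in <ins> or <del>.
// Leaves the input untouched when no matching close tag is found.
function wrapElement(html, openStart, wrapperTag) {
  const end = findElementEnd(html, openStart);
  if (end === -1) return html;
  return (
    html.slice(0, openStart) +
    `<${wrapperTag}>` +
    html.slice(openStart, end) +
    `</${wrapperTag}>` +
    html.slice(end)
  );
}

const sample = '<p>a</p><div class="class2"><div>x</div>y</div><p>b</p>';
console.log(wrapElement(sample, sample.indexOf('<div class="class2">'), "ins"));
// → <p>a</p><ins><div class="class2"><div>x</div>y</div></ins><p>b</p>
```

The nested `<div>` in the sample shows why a depth counter is needed: stopping at the first `</div>` would close the wrapper in the middle of the element.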

WikiWash is a complex tool built to solve a very simple problem: viewing changes in Wikipedia, one of the world's largest and most popular websites. These are only some of the technical challenges we've had to overcome to build this tool, and we invite you to fork the project on GitHub to help us improve it.

Originally posted on The Working Group blog. Reposted via Creative Commons.
